Data Pipeline Streamlining Information: Critical Role and Practical Approaches

Surya V

2025-03-30

Talk to our cloud experts

Subject tags

Nearly 328.77 million terabytes of data are generated worldwide every day, yet much of it remains underutilized due to inefficient processing. Modern businesses struggle to turn raw information into actionable insights, often drowning in fragmented data silos and sluggish workflows.

This is where well-designed data pipelines become indispensable. They act as the nervous system of data-driven organizations, ensuring smooth collection, transformation, and delivery of critical information. But how can companies build efficient pipelines without getting lost in complexity?

This article explores the essential role of data pipelines, practical approaches to streamline them, and strategies to ensure seamless data flow across systems.

Understanding Data Pipelines

A data pipeline is a structured framework that automates the movement and transformation of data from various sources to a designated destination, such as a data warehouse or analytics platform. It ensures seamless data flow, enabling organizations to process, analyze, and utilize information efficiently.

Data pipelines play a crucial role in managing large-scale data operations by ensuring accuracy, consistency, and timely availability of data for decision-making.

Core Functions of Data Pipelines

Data pipelines serve multiple essential functions that contribute to efficient data management and processing. Below are their key roles:

- Data Ingestion: Collects data from multiple sources, including databases, APIs, IoT devices, and cloud platforms, ensuring seamless integration.

- Data Transformation: Converts raw data into a structured format by applying cleaning, filtering, aggregation, and enrichment processes to enhance usability.

- Data Orchestration: Manages and schedules workflows to ensure smooth execution of tasks, dependencies, and processing sequences across different systems.

- Data Storage: Moves processed data into structured storage systems such as data lakes, warehouses, or operational databases for efficient retrieval and analysis.

- Data Validation and Quality Assurance: Ensures data accuracy and consistency by applying validation rules, detecting anomalies, and handling missing or corrupt records.

- Scalability and Performance Optimization: Adapts to varying data volumes and processing demands, ensuring high availability and efficiency under different workloads.

- Monitoring and Logging: Tracks pipeline performance, detects failures, and generates logs for troubleshooting and improving workflow execution.

- Security and Compliance: Implements encryption, access controls, and compliance measures to safeguard sensitive data and adhere to regulatory standards.

A well-structured data pipeline enhances operational efficiency and enables organizations to extract meaningful insights from their data assets.

Also, understanding its different types is fundamental to building reliable and scalable data-driven systems. In the next part, we will discover different types of data pipelines.

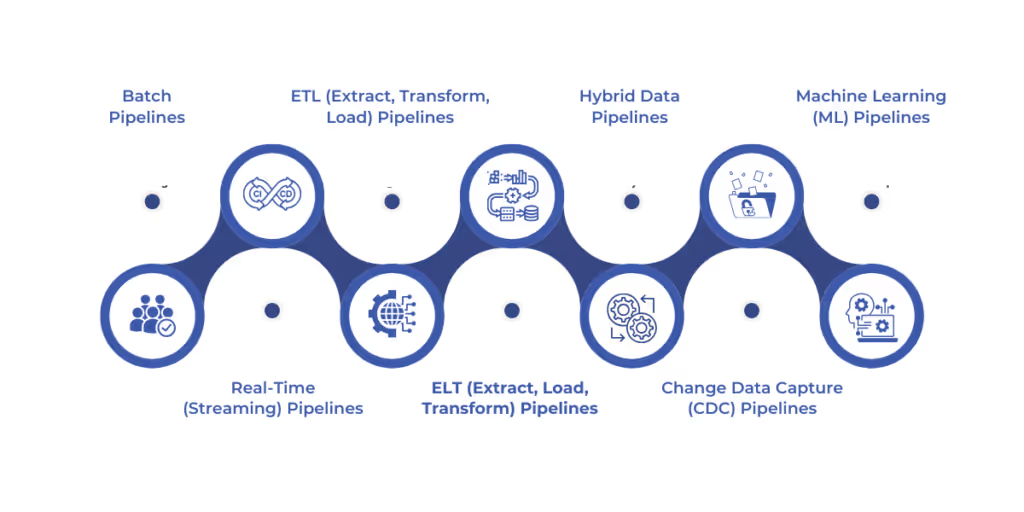

Types of Data Pipelines

Data pipelines come in various types, each designed to handle specific data processing needs. Below are the most common types of data pipelines, along with their key characteristics and real-world applications.

1. Batch Pipelines

These pipelines process data in scheduled intervals, collecting and transforming large volumes of data before delivering it to a destination. They are useful for scenarios where real-time processing is not required.

Example: A retail company aggregates daily sales data from multiple stores and loads it into a data warehouse every night for analysis.

2. Real-Time (Streaming) Pipelines

Designed to process and analyze data as it is generated, these pipelines enable immediate insights and rapid decision-making. They are critical for applications requiring low latency.

Example: Financial institutions use streaming pipelines to detect fraudulent transactions in real time, flagging suspicious activity within seconds.

3. ETL (Extract, Transform, Load) Pipelines

ETL pipelines extract data from various sources, apply transformations such as cleaning and aggregation, and load it into a target system. This traditional approach ensures data quality and consistency.

Example: A healthcare organization extracts patient data from multiple hospital databases, standardizes formats, and loads it into a centralized system for unified record-keeping.

4. ELT (Extract, Load, Transform) Pipelines

Similar to ETL, but in ELT, raw data is first loaded into a storage system (like a data lake) and then transformed as needed. This is beneficial for handling large, unstructured datasets.

Example: A streaming service collects raw user activity data in a cloud data lake, where analysts later transform and analyze it for personalized recommendations.

5. Hybrid Data Pipelines

These pipelines combine elements of batch and real-time processing to meet diverse business needs. They allow organizations to process some data in real time while handling large-scale aggregation separately.

Example: A logistics company tracks delivery trucks in real time while using batch processing for daily inventory updates.

6. Change Data Capture (CDC) Pipelines

CDC pipelines identify and capture changes in a database in real time, ensuring that only modified records are updated instead of reprocessing entire datasets.

Example: An e-commerce platform syncs order status changes across different systems without reloading the entire order history.

7. Machine Learning (ML) Pipelines

These pipelines handle the end-to-end process of collecting, processing, training, and deploying machine learning models, ensuring smooth automation and iteration.

Example: A social media company processes millions of posts daily, training an ML model to detect and remove harmful content automatically.

Choosing the right type of data pipeline is crucial for optimizing data workflows, improving efficiency, and enabling businesses to extract actionable insights from their information assets. Now, let’s discuss how exactly data pipelines work.

How Data Pipelines Work

Data pipelines function through a series of automated processes that collect, transform, and deliver data to a designated destination. These pipelines ensure seamless data flow, maintaining accuracy, consistency, and efficiency across various stages of processing. Below are the key steps involved in their operation:

Step 1: Data Ingestion

The pipeline collects raw data from multiple sources, such as databases, APIs, streaming platforms, IoT devices, or cloud storage. This step ensures that all relevant data is captured efficiently, regardless of its format or origin.

Step 2: Data Processing and Transformation

Once ingested, data undergoes transformation to improve its structure and quality. This includes cleaning, normalization, aggregation, and enrichment, ensuring that the data is in a usable format for analysis or operational needs.

Step 3: Data Orchestration and Workflow Management

The pipeline coordinates the execution of various tasks, managing dependencies, scheduling operations, and optimizing resource usage to ensure smooth and timely processing of data across different systems.

Step 4: Data Storage and Management

Processed data is stored in appropriate storage solutions such as data lakes, warehouses, or operational databases, ensuring easy access, retrieval, and further analysis as required by business operations.

Step 5: Data Validation and Quality Control

Automated checks are applied to verify data accuracy, completeness, and consistency. Errors, duplicates, and anomalies are identified and handled to maintain high data integrity before it reaches the destination.

Step 6: Data Delivery and Integration

The final step involves loading the processed data into target systems, such as business intelligence (BI) tools, machine learning models, or real-time dashboards, enabling efficient decision-making and insights extraction.

Step 7: Monitoring, Logging, and Error Handling

Continuous monitoring ensures pipeline performance and reliability. Logs are generated to track errors, identify bottlenecks, and optimize system efficiency, while automated alerts notify teams of any failures or inconsistencies.

Each step in a data pipeline is crucial for maintaining a seamless and automated workflow. It ensures that businesses can effectively utilize data for strategic and operational purposes.

Comparing ETL and Continuous Data Pipelines

ETL (Extract, Transform, Load) and Continuous Data Pipelines serve distinct purposes in data management. While ETL is a structured approach that processes data in batches, Continuous Data Pipelines enable real-time or near-real-time data movement. The table below highlights key differences between these two approaches:

FeatureETL PipelinesContinuous Data PipelinesProcessing TypeBatch processing at scheduled intervalsReal-time or near-real-time processingData LatencyHigh latency; data is updated periodicallyLow latency; data is processed instantlyUse CasesHistorical reporting, data warehousing, compliance needsStreaming analytics, real-time monitoring, fraud detectionComplexityRequires predefined transformations before loadingMore complex due to real-time orchestration and event-driven architectureStorage ApproachData is transformed before being storedRaw data is often stored first, with transformations applied as neededScalabilityScales well for large historical datasetsHighly scalable for continuous and dynamic data streamsError HandlingErrors can be corrected before loadingRequires real-time anomaly detection and immediate correction mechanisms

Both ETL and Continuous Data Pipelines play critical roles in data ecosystems, depending on business requirements. Understanding their differences helps organizations choose the right approach for their needs.

Next, we will explore the benefits of data pipelines and how they enhance data-driven decision-making.

Benefits of Data Pipelines

Data pipelines enhance efficiency, accuracy, and scalability, enabling organizations to make data-driven decisions with minimal manual intervention. Below are the key benefits:

- Improved Data Accuracy and Consistency

Automated data processing reduces errors, eliminates inconsistencies, and ensures data integrity, leading to reliable insights for business decision-making. - Enhanced Efficiency and Automation

By automating data movement and transformation, pipelines eliminate manual tasks, reducing processing time and increasing overall operational efficiency. - Scalability to Handle Large Data Volumes

Well-structured pipelines efficiently manage increasing data loads, adapting to business growth and ensuring seamless data flow across multiple sources and destinations. - Real-Time Data Processing Capabilities

Continuous data pipelines enable instant data processing and analytics, supporting real-time monitoring, fraud detection, and rapid decision-making. - Seamless Integration Across Systems

Pipelines facilitate smooth data exchange between diverse platforms, databases, and applications, ensuring compatibility and unified data access across an organization. - Better Compliance and Security

Implementing access controls, encryption, and compliance measures ensures that sensitive data is handled securely and meets regulatory requirements. - Optimized Resource Utilization

Efficient pipeline orchestration minimizes processing overhead, optimizes system performance, and reduces infrastructure costs associated with large-scale data operations.

A well-designed data pipeline significantly enhances business growth and long-term success. Now that you know the benefits and critical roles of data pipelines, it is time to learn how to build and optimize them properly.

In the next section, we will explore building and optimizing data pipelines to maximize their effectiveness.

Building and Optimizing Data Pipelines

Building and optimizing data pipelines enhance speed, accuracy, and resource efficiency. This helps to make data pipelines more robust and adaptable. Below are the key steps to build and optimize data pipelines effectively:

Step 1. Define Business and Data Requirements

Clearly outline the objectives of the pipeline, the type of data it will process, and its intended use cases. Understanding business goals ensures that the pipeline is designed to meet operational and analytical needs.

Step 2. Choose the Right Architecture

Select an appropriate pipeline architecture. Then, focus on batch processing and real-time streaming. It should be based on the data volume, latency requirements, and infrastructure capabilities. The right design enhances efficiency and aligns with business objectives.

Step 3. Select Scalable and Flexible Technologies

Use robust tools and frameworks like Apache Kafka, Apache Airflow, Spark, or cloud-based solutions that support scalability, fault tolerance, and high availability. Cloud-native architectures ensure adaptability to growing data demands.

Step 4. Implement Data Quality and Validation Mechanisms

Ensure that data is accurate, complete, and consistent by incorporating validation checks, deduplication, and anomaly detection. Automated monitoring helps identify errors early and prevents downstream issues.

Step 5. Optimize Data Transformation and Processing

Apply efficient transformation techniques such as parallel processing, indexing, and caching to reduce processing time and enhance performance. Data normalization and aggregation should be optimized for analytical queries.

Step 6. Ensure Secure Data Handling and Compliance

Incorporate encryption, access controls, and regulatory compliance measures (such as GDPR or HIPAA) to protect sensitive data and mitigate security risks throughout the pipeline.

Step 7. Monitor and Automate Pipeline Performance

Use monitoring tools and logging mechanisms to track pipeline health, detect failures, and optimize performance. Implement automation for error handling, reruns, and alerting to minimize downtime.

Step 8. Optimize Resource Utilization and Cost Efficiency

Fine-tune resource allocation by selecting appropriate storage solutions, optimizing query execution, and implementing auto-scaling for cloud-based pipelines. Cost-effective strategies prevent resource wastage while maintaining performance.

Step 9. Enable Flexibility for Future Modifications

Design pipelines with modular and reusable components to facilitate future enhancements, integrations, or migrations. Adaptability ensures long-term sustainability and easier updates without major disruptions.

Building a data pipeline requires careful planning, while optimization ensures consistent performance and reliability. A well-optimized pipeline enhances decision-making and business intelligence, enabling organizations to maximize the value of their data.

Conclusion

Data pipelines are crucial in modern data management, enabling seamless data flow, transformation, and integration across various systems. As technology evolves, future trends in data pipelines will focus on automation, AI-driven optimizations, and real-time processing to enhance efficiency and reliability.

Adopting serverless architectures and cloud-native solutions will further improve scalability and cost-effectiveness. Organizations that invest in advanced data pipeline strategies will gain a competitive edge. Effective pipeline management remains vital for sustained success.

To level up your data management, try WaferWire. Here, we offer a comprehensive data discovery and assessment process that aligns your business goals with data capabilities.

Our tailored strategies focus on governance, integration, and advanced analytics, ensuring you achieve measurable outcomes and leverage data for competitive advantage. Contact us now to transform your data management journey!

Subscribe to Our Newsletter

Get instant updates in your email without missing any news

Copyright © 2025 WaferWire Cloud Technologies

.png)