AI-Driven Cyberattacks: How US Companies Can Stay Secure

Sai P

6th Sept 2025

How can US companies protect themselves from the growing threat of AI-driven cyberattacks? As artificial intelligence (AI) advances, cybercriminals are increasingly using it to carry out more sophisticated, faster, and harder-to-detect attacks.

AI and machine learning (ML) are being applied to automate and personalize cyberattacks, creating a new level of risk for businesses.

This article will explore the types of AI-enabled cyberattacks and provide practical steps for companies to strengthen their defenses.

Key Takeaways:

- AI-driven cyberattacks are evolving, with cybercriminals using AI to automate and personalize attacks, making them faster and harder to detect.

- Common types of AI-enabled attacks include AI-driven phishing, deepfake technology, and adversarial AI.

- Financial risks are escalating: US businesses see an average cost of $254,445 per cyber incident, and global cybercrime damages are projected to reach $10.5 trillion annually by 2025.

- Proactive defense strategies like employee training, real-time monitoring, and zero trust architecture are critical to staying ahead of AI-driven threats.

- AI-powered technologies such as anomaly detection, hybrid AI models, and behavioral AI are emerging as essential tools in combating these threats.

Understanding AI-Enabled Cyberattacks

AI-driven cyberattacks enhance traditional techniques through automation and data analysis, allowing attackers to execute more effective and harder-to-detect attacks. Here's how:

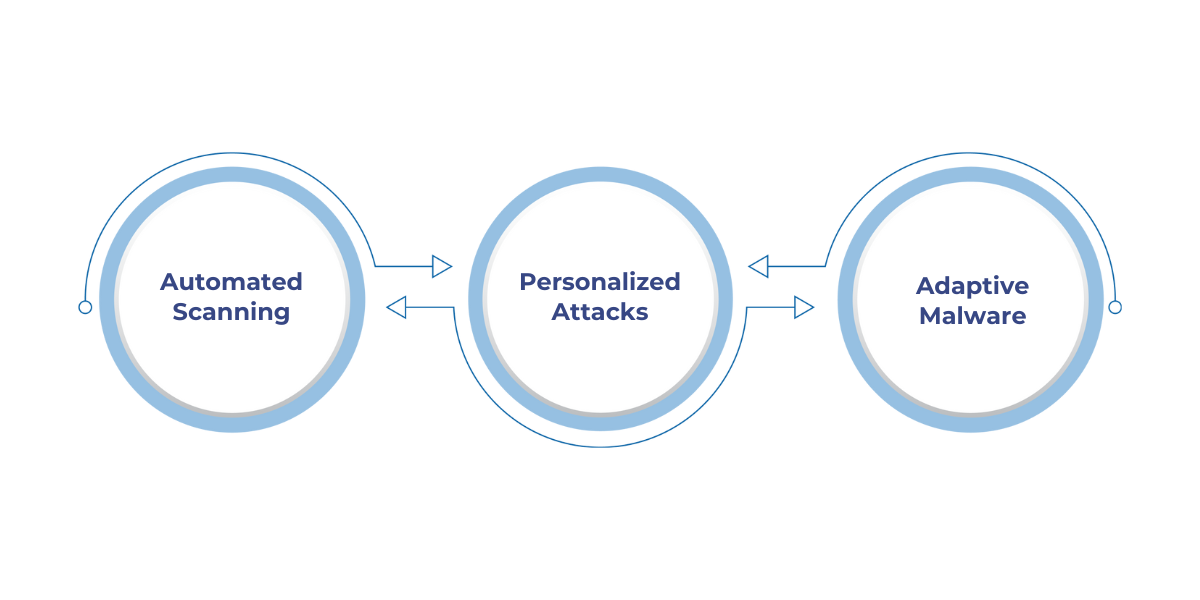

1. Automated Scanning

AI tools enable attackers to scan networks for vulnerabilities rapidly. These tools can perform actions like scanning open ports, scraping data, and running social engineering attacks using chatbots.

The speed and scale of automation let attackers find weak points much faster than manual methods would.
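On the defensive side, that speed shows up in connection logs as one source touching many ports in a short time. The sketch below flags this pattern with a sliding window. It assumes logs have already been parsed into timestamp, source IP, and destination port; the names `ConnectionEvent` and `detect_port_scans` and the default thresholds are hypothetical, chosen for illustration rather than tuned for any real network.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ConnectionEvent:
    """Hypothetical parsed log record: one inbound connection attempt."""
    timestamp: float  # seconds since the epoch
    src_ip: str
    dst_port: int


def detect_port_scans(events, window_seconds=60.0, port_threshold=20):
    """Return source IPs that hit at least `port_threshold` distinct
    destination ports within any `window_seconds`-long sliding window."""
    by_source = defaultdict(list)
    for event in events:
        by_source[event.src_ip].append(event)

    suspects = set()
    for src_ip, src_events in by_source.items():
        src_events.sort(key=lambda e: e.timestamp)
        port_counts = defaultdict(int)  # port -> hits inside the current window
        start = 0
        for event in src_events:
            port_counts[event.dst_port] += 1
            # Drop events that have fallen out of the window on the left.
            while event.timestamp - src_events[start].timestamp > window_seconds:
                old_port = src_events[start].dst_port
                port_counts[old_port] -= 1
                if port_counts[old_port] == 0:
                    del port_counts[old_port]
                start += 1
            if len(port_counts) >= port_threshold:
                suspects.add(src_ip)
                break  # one window over the threshold is enough to flag
    return suspects


# Usage: a fast sweep of 50 ports vs. a client repeatedly using port 443.
events = [ConnectionEvent(1000.0 + i * 0.1, "203.0.113.7", 1 + i) for i in range(50)]
events += [ConnectionEvent(1000.0 + i * 30, "198.51.100.2", 443) for i in range(10)]
print(detect_port_scans(events))  # {'203.0.113.7'}
```

A slow scan spread over hours would slip under a 60-second window, which is one reason fixed thresholds are usually paired with the anomaly-based approaches described later in this article.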

2. Personalized Attacks

By analyzing publicly available data, AI allows attackers to craft highly targeted phishing emails and tailored social engineering tactics. These personalized messages are far more convincing, increasing the likelihood of success.

3. Adaptive Malware

AI-driven malware can modify its behavior in real time, adapting to an organization's defense mechanisms. Because it can alter its own code, signature-based tools struggle to recognize it, allowing the malware to bypass traditional security controls and remain undetected.
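A small illustration of why self-modifying code troubles signature-based tools: an exact-hash signature matches only the exact bytes it was computed from, so even a one-byte change produces a different hash. The byte strings below are harmless placeholders standing in for samples, and `known_bad_hashes` is a hypothetical blocklist.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


# Hypothetical signature database: hashes of previously seen samples.
# The byte strings are harmless placeholders, not real malware.
known_bad_hashes = {sha256_hex(b"PLACEHOLDER-SAMPLE-v1")}


def signature_match(sample: bytes) -> bool:
    """Exact-hash check: matches only byte-for-byte identical samples."""
    return sha256_hex(sample) in known_bad_hashes


original = b"PLACEHOLDER-SAMPLE-v1"
variant = original + b"\x00"  # one appended byte stands in for self-modification

print(signature_match(original))  # True
print(signature_match(variant))   # False
```

Real signature engines use more flexible patterns than a single hash, but the underlying weakness is the same: they describe what a sample looks like, not what it does.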

With this understanding, let’s take a closer look at the common types of AI-enabled cyberattacks that are becoming prevalent.

Common Types of AI-Enabled Cyberattacks

AI is enhancing cyberattacks, making them more advanced and harder to detect. Here are the most common types of AI-enabled cyberattacks that businesses need to be aware of:

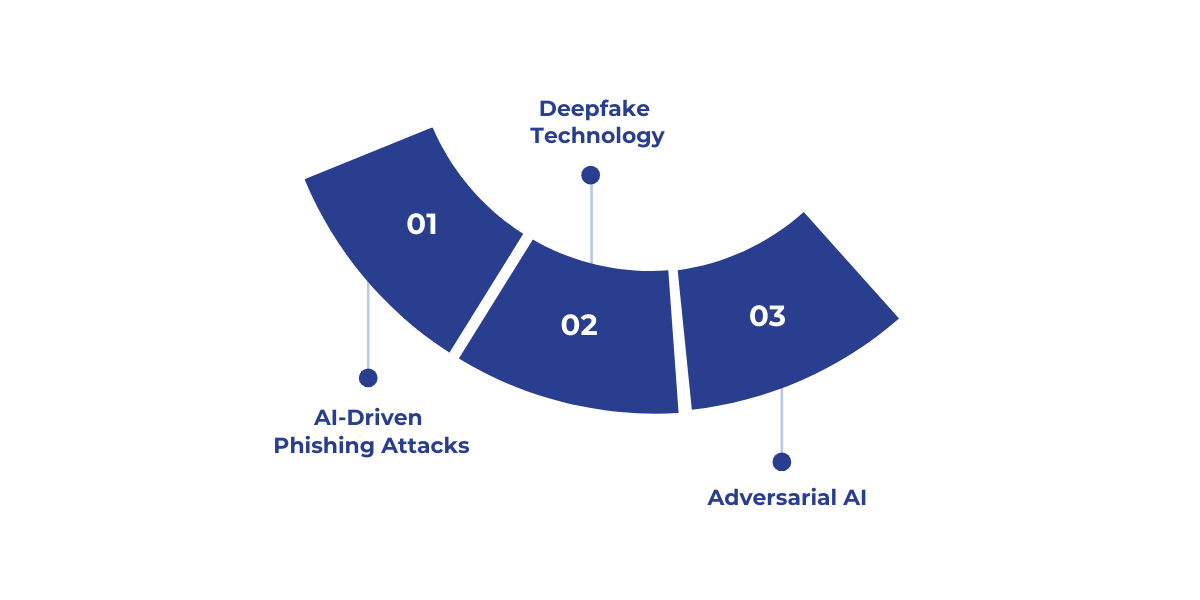

1. AI-Driven Phishing Attacks

AI is taking phishing attacks to a new level by using natural language processing (NLP) and machine learning to craft highly personalized and convincing emails.
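AI can make the message body convincing, but a spear-phishing email still has to arrive from somewhere. One common, simple control (not specific to AI) is to flag sender domains that sit a few edits away from a trusted domain, such as `examp1e.com` posing as `example.com`. A minimal sketch using Levenshtein edit distance, assuming a hypothetical allowlist `TRUSTED_DOMAINS` and a threshold of two edits:

```python
def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


# Hypothetical allowlist of domains the organization actually uses.
TRUSTED_DOMAINS = {"example.com", "examplebank.com"}


def is_lookalike(sender: str, max_distance: int = 2) -> bool:
    """True if the sender's domain is close to, but not exactly, a trusted one."""
    domain = sender.rsplit("@", 1)[-1].strip().lower()
    if domain in TRUSTED_DOMAINS:
        return False
    return any(levenshtein(domain, t) <= max_distance for t in TRUSTED_DOMAINS)


print(is_lookalike("cfo@examp1e.com"))     # True  (one substitution)
print(is_lookalike("cfo@example.com"))     # False (exact trusted match)
print(is_lookalike("news@unrelated.org"))  # False (not close to any trusted domain)
```

Edit distance alone misses homoglyph tricks that use non-ASCII characters and can flag legitimate partner domains, so it complements, rather than replaces, email authentication such as SPF, DKIM, and DMARC.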

2. Deepfake Technology

AI-powered deepfake technology manipulates audio, video, and images to impersonate individuals, often executives.

This can trick employees into authorizing fraudulent transactions, disclosing sensitive information, or committing security breaches. Deepfakes exploit trust, making traditional fraud detection less effective.

- Growing Threat: Deepfakes are increasingly used in phishing and business email compromise attacks, raising the risk of misinformation and deception.

- Usage in Cybercrime: Attackers use deepfakes in social engineering campaigns to bypass security and gain unauthorized access to company assets.
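Because a deepfake attacks the communication channel rather than a system, a common process control is out-of-band verification: high-value requests that arrive by email, voice, or video are held until someone confirms them through contact details already on file. The sketch below encodes that rule; the `PaymentRequest` fields, channel names, and the $10,000 threshold are hypothetical policy choices, not recommendations.

```python
from dataclasses import dataclass

# Hypothetical policy values; each organization would set its own.
REMOTE_CHANNELS = {"email", "voice", "video"}
CALLBACK_THRESHOLD_USD = 10_000


@dataclass
class PaymentRequest:
    requester: str
    amount_usd: float
    channel: str             # how the request arrived, e.g. "video"
    callback_verified: bool  # confirmed via a contact already on file?


def must_hold(request: PaymentRequest) -> bool:
    """Hold high-value remote requests until verified out of band.

    Policy note: the callback should use contact details already on
    record, never a number or link supplied in the request itself,
    since an attacker controls those.
    """
    high_value = request.amount_usd >= CALLBACK_THRESHOLD_USD
    remote = request.channel in REMOTE_CHANNELS
    return high_value and remote and not request.callback_verified


urgent_call = PaymentRequest("CEO (video call)", 250_000, "video", callback_verified=False)
print(must_hold(urgent_call))  # True
```

The value of a rule like this is that it does not depend on a person judging whether a voice or face is genuine, which is exactly the judgment deepfakes are built to defeat.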

3. Adversarial AI

Adversarial AI targets the machine learning models inside security tools. Poisoning attacks corrupt a model's training data, while evasion attacks craft inputs that the trained model misclassifies. Either way, AI-driven security systems are deceived into making incorrect decisions, letting malicious activity pass as benign.

- Continuous Adaptation: Adversarial techniques evolve in real time, using automated testing and machine learning refinements to adapt and evade detection.
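To make evasion concrete, the sketch below attacks a toy linear "malicious score" model. For a linear model, the gradient of the score with respect to the input is simply the weight vector, so a fast gradient sign method (FGSM) style step nudges every feature against the sign of its weight. The weights, bias, and sample are made-up values; real detectors are far more complex, but this gradient-guided idea underlies many evasion attacks and is why defenders test their models against adversarial inputs.

```python
# Toy linear detector: score = w . x + b, flagged as malicious if score > 0.
# Weights, bias, and the sample are made-up illustrative values.
WEIGHTS = [2.0, -1.0, 1.5]
BIAS = -1.0


def score(x):
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def is_flagged(x):
    return score(x) > 0


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_evade(x, epsilon):
    """One FGSM-style step that lowers the score.

    For a linear model, d(score)/dx is exactly WEIGHTS, so moving each
    feature by -epsilon * sign(weight) lowers the score by
    epsilon * sum(|w|), the largest drop possible when no feature may
    change by more than epsilon.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(WEIGHTS, x)]


sample = [0.6, 0.2, 0.4]
adversarial = fgsm_evade(sample, epsilon=0.15)
print(is_flagged(sample))       # True  (score about 0.6)
print(is_flagged(adversarial))  # False (score about -0.075)
```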

The growing threat of AI-enabled cyberattacks also carries significant financial and operational risks, underscoring the need for strong defenses.

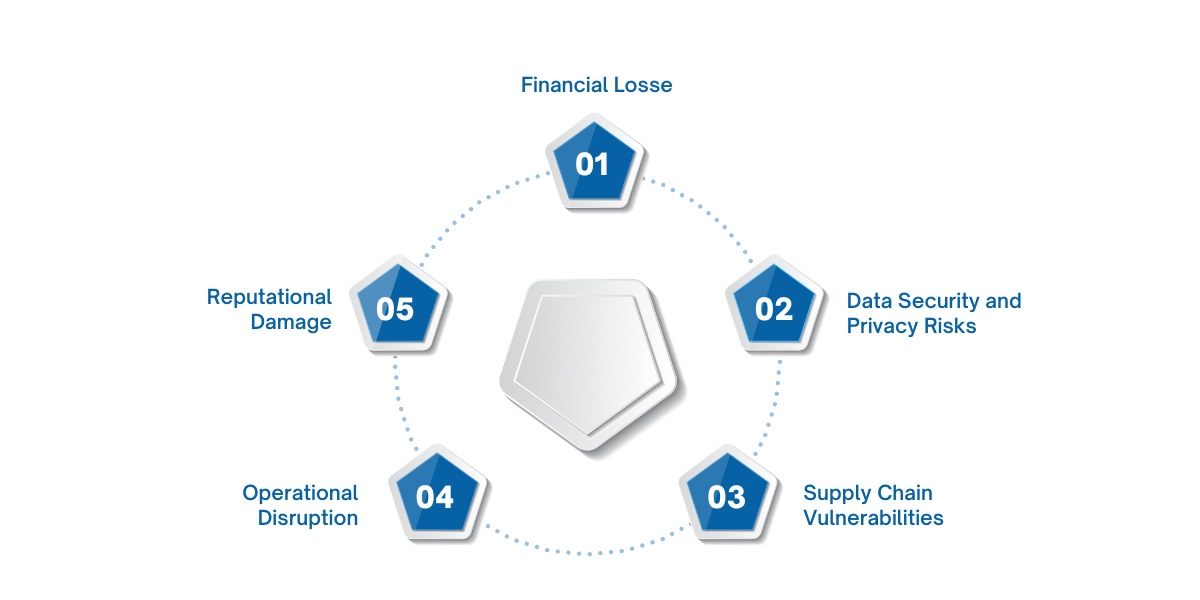

Impact of AI-Enabled Cyberattacks on Companies

AI-enabled attacks are more than a technical issue; they also pose significant financial and operational risks, such as:

- Data Security and Privacy Risks: AI allows attackers to craft phishing emails that closely mimic legitimate communications. This increases the risk of data theft, compromising sensitive information across industries.

- Supply Chain Vulnerabilities: AI-driven attacks can extend beyond traditional IT systems, targeting operational technology and IoT devices. The interconnectedness of modern businesses makes them more susceptible to cascading effects, impacting entire supply chains.

- Operational Disruption: AI attacks, especially those targeting critical infrastructure, can disrupt business operations. For instance, an AI-induced system outage could halt production lines or delay logistics in industries like manufacturing or healthcare.

- Reputational Damage: Even after remediation, companies face long-term damage to their reputation. Over half of customers may sever ties with businesses after a data breach, especially if they believe sensitive data has been compromised.

To effectively safeguard against these threats, businesses must adopt proactive defense strategies, which we'll explore next.

Proactive Defense Strategies for AI-Driven Threats

To effectively defend against AI-driven cyber threats, organizations need a proactive approach that combines continuous monitoring, employee training, and collaboration. The following table outlines the key strategies:

Furthermore, emerging technologies, particularly AI-powered solutions, are playing a crucial role in enhancing cybersecurity defenses.

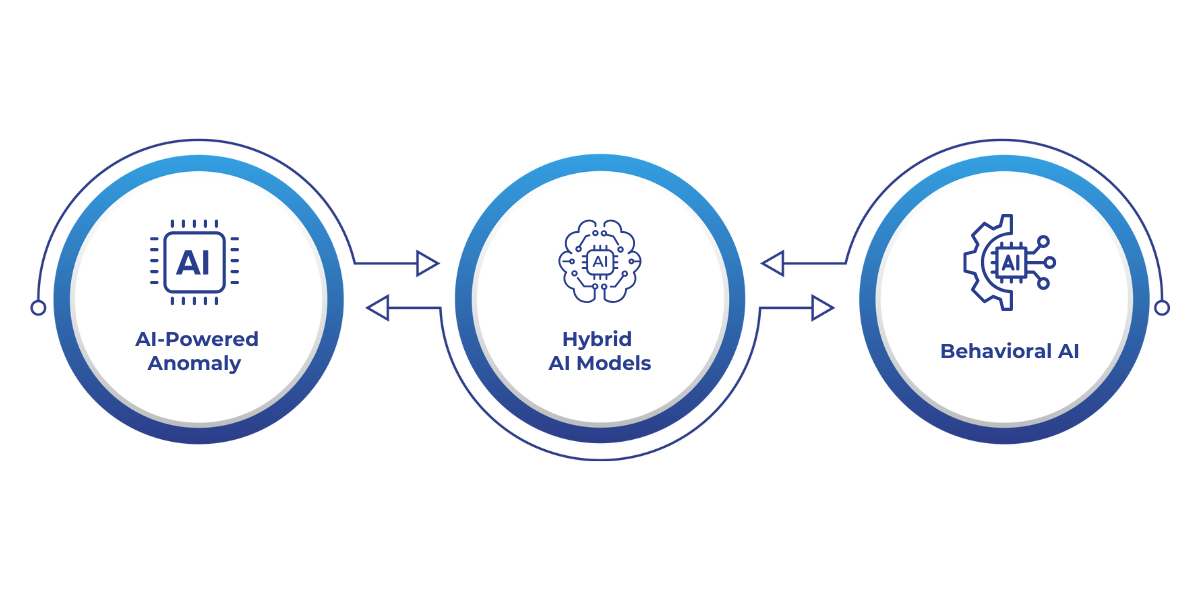

Emerging Technologies and Approaches for Cyber Defense

AI's dual role as both an enabler of attacks and a defensive tool has led to the rise of innovative solutions aimed at enhancing cybersecurity defenses.

1. AI-Powered Anomaly Detection Systems

Modern AI systems use unsupervised machine learning to learn a baseline of normal network traffic and flag deviations from it. Because they do not depend on known signatures, these systems can spot previously unknown threats, such as zero-day exploits.

- Impact: Enhanced detection and reduced false positives, enabling faster identification of real threats.
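The core idea, learning what "normal" looks like and flagging departures from it, can be shown with a deliberately simple statistical stand-in: a rolling z-score over hourly outbound traffic. This is not the unsupervised ML model that commercial systems use; `find_anomalies`, the 24-point baseline, the 3.0 threshold, and the traffic figures are illustrative assumptions.

```python
import statistics


def find_anomalies(values, baseline_size=24, z_threshold=3.0):
    """Flag points more than `z_threshold` standard deviations away from
    the mean of the preceding `baseline_size` points (a rolling z-score)."""
    anomalies = []
    for i in range(baseline_size, len(values)):
        baseline = values[i - baseline_size:i]
        mean = statistics.fmean(baseline)
        stdev = statistics.pstdev(baseline)
        if stdev == 0:
            continue  # perfectly flat baseline: z-score undefined, skip
        z = (values[i] - mean) / stdev
        if abs(z) > z_threshold:
            anomalies.append((i, values[i], round(z, 1)))
    return anomalies


# Hypothetical hourly outbound traffic in MB: a steady pattern, then a spike.
traffic = [100, 104, 98, 101, 99, 103, 97, 102] * 3 + [100, 450, 101]
for index, value, z in find_anomalies(traffic):
    print(f"hour {index}: {value} MB (z = {z})")
```

Note that the spike then inflates the baseline for the following hours, which would mask a second spike; that weakness is one reason production systems rely on robust statistics or learned models instead.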

2. Hybrid AI Models for Threat Detection

Combining Convolutional Neural Networks (CNNs) and Generative Adversarial Networks (GANs) can yield highly accurate anomaly detection with few false alarms.

These hybrid models are increasingly used to improve detection rates in complex environments such as cloud infrastructure.

- Action: Integrate these hybrid AI models to improve security operations center (SOC) capabilities, enabling automatic investigations and alerts.

3. Behavioral AI

Unlike traditional signature-based systems, behavioral AI continuously assesses user and device actions in real time, spotting deviations that might indicate a compromise.

This enables early detection of threats that signature-based methods typically miss.

- Action: Deploy behavioral AI to monitor internal activities and prevent insider threats or compromised accounts from causing harm.
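A minimal sketch of the behavioral idea, assuming login events carry a user name, country, and hour of day: the hypothetical `BehaviorBaseline` class learns each user's usual countries and hours and reports logins that fall outside them. Real behavioral AI products model far richer signals (devices, data access, process activity); the minimum-history and rarity thresholds here are illustrative.

```python
from collections import Counter, defaultdict


class BehaviorBaseline:
    """Hypothetical per-user profile of login countries and hours of day."""

    def __init__(self, min_history=20, rare_fraction=0.05):
        self.countries = defaultdict(Counter)  # user -> Counter of countries
        self.hours = defaultdict(Counter)      # user -> Counter of hours (0-23)
        self.min_history = min_history
        self.rare_fraction = rare_fraction

    def observe(self, user, country, hour):
        """Record one known-good login in the user's profile."""
        self.countries[user][country] += 1
        self.hours[user][hour] += 1

    def assess(self, user, country, hour):
        """Return reasons this login deviates from the user's history."""
        total = sum(self.countries[user].values())
        if total < self.min_history:
            return ["insufficient history"]
        reasons = []
        if self.countries[user][country] / total < self.rare_fraction:
            reasons.append(f"unusual country: {country}")
        if self.hours[user][hour] / total < self.rare_fraction:
            reasons.append(f"unusual hour: {hour:02d}:00")
        return reasons


baseline = BehaviorBaseline()
for day in range(30):
    baseline.observe("alice", "US", 9 + day % 3)  # logs in at 09, 10, or 11

print(baseline.assess("alice", "US", 10))  # []
print(baseline.assess("alice", "RO", 3))   # ['unusual country: RO', 'unusual hour: 03:00']
```

Returning reasons rather than a bare yes/no gives analysts context for triage, which matters when these signals feed the automated investigations mentioned above.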

Strategic Responses to AI-Driven Cyber Threats

To effectively address this rising danger, organizations must adopt a holistic approach that combines cutting-edge technology, streamlined processes, and a well-informed workforce.

1. Skill Development

2. Cross-Sector Collaboration

Collaboration between the public and private sectors can help develop unified AI security standards. Sharing intelligence and best practices can improve the collective defense against emerging AI threats.

3. Incident Response Planning

A well-developed incident response plan, including predefined playbooks for AI-driven attack scenarios, is crucial. Testing and refining these plans through regular exercises ensures that organizations are prepared for rapid, coordinated responses to potential breaches.
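Playbooks are often kept as structured data so they can be versioned, reviewed, and walked through step by step during exercises. The sketch below shows one possible shape; the deepfake payment scenario, step wording, and role names are hypothetical examples, not a recommended procedure.

```python
from dataclasses import dataclass
from typing import Optional, Set, Tuple


@dataclass(frozen=True)
class Step:
    action: str
    owner: str  # role responsible for the step


@dataclass(frozen=True)
class Playbook:
    scenario: str
    steps: Tuple[Step, ...]

    def next_step(self, completed: Set[str]) -> Optional[Step]:
        """Return the first step not yet marked complete, or None when done."""
        for step in self.steps:
            if step.action not in completed:
                return step
        return None


# Hypothetical playbook; steps and roles are illustrative only.
DEEPFAKE_PAYMENT = Playbook(
    scenario="Suspected deepfake payment request",
    steps=(
        Step("Freeze the pending transfer", "Finance on-call"),
        Step("Verify the requester via contact details on file", "Finance on-call"),
        Step("Preserve recordings, messages, and headers", "SOC analyst"),
        Step("Notify the bank, legal, and leadership", "Incident commander"),
    ),
)

done = {"Freeze the pending transfer"}
step = DEEPFAKE_PAYMENT.next_step(done)
print(f"Next: {step.action} (owner: {step.owner})")
```

During a tabletop exercise, stepping through `next_step` makes gaps visible quickly, for example a step with no clear owner or an action the team cannot actually perform.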

Conclusion

Cyberattacks are becoming increasingly advanced, posing a major threat to US companies. As these attacks become more complex, businesses must adopt proactive defense strategies.

By implementing strong security measures, such as advanced monitoring, real-time threat detection, and ongoing risk assessments, companies can stay ahead of cybercriminals and protect their operations.

At WaferWire, we specialize in providing AI-driven cloud security solutions tailored to your organization's needs. Our services help businesses streamline their compliance, optimize operational resilience, and protect against emerging threats.

Contact us today to learn how our innovative cloud services can strengthen your security posture.

FAQs

Q: How can companies effectively train employees to spot AI-driven cyber threats?

A: Training programs should include simulated phishing attacks, deepfake identification, and awareness of AI-based social engineering tactics. Regular training sessions and gamification can help employees stay sharp and recognize new threats.

Q: How does AI impact the cost of compliance for businesses?

A: AI can significantly reduce the cost of compliance by automating risk monitoring, providing real-time updates on regulatory changes, and reducing human error. This leads to better resource allocation and lowers overall compliance expenses.

Q: What role does AI play in detecting new types of cyberattacks?

A: AI’s ability to analyze large volumes of data in real time helps detect emerging cyber threats that might go unnoticed by traditional methods. AI models continuously learn, improving their ability to spot novel attack patterns.

Q: How do AI-driven attacks affect small to mid-sized businesses compared to larger companies?

A: Smaller businesses often lack the advanced defenses of larger organizations, making them more vulnerable to AI-driven attacks. These businesses face higher relative costs of security breaches due to limited resources and expertise.

Q: What are the risks of ignoring AI-driven cybersecurity measures?

A: Ignoring AI-driven cybersecurity increases the likelihood of falling victim to sophisticated attacks that traditional methods can’t defend against. This could lead to financial losses, data breaches, and significant reputational damage.

Copyright © 2025 WaferWire Cloud Technologies
