Data Transformation in Microsoft Fabric: Best Practices Guide

Murthy

8th Oct 2025

Frustrated with valuable data trapped in isolated systems, slowing decisions and growth? Organizations with integrated data are more likely to gain new customers. Microsoft Fabric makes this effortless, unifying engineering, storage, and analytics in a single platform with built-in ingestion, transformation, security, and governance.

This guide covers best practices for data transformation in Microsoft Fabric to help you scale efficiently, ensure accuracy, and maximize the value of your data.

Key Takeaways

- Automate ETL workflows and optimize storage to ensure fast, accurate, and scalable data transformation in Microsoft Fabric.

- Standardize and cleanse data while enforcing role-based security for reliable insights.

- Address challenges like inconsistent schemas, large datasets, and processing latency to improve reporting and decision-making.

- Align data transformation with business goals to enable smarter analytics, AI initiatives, and forecasting.

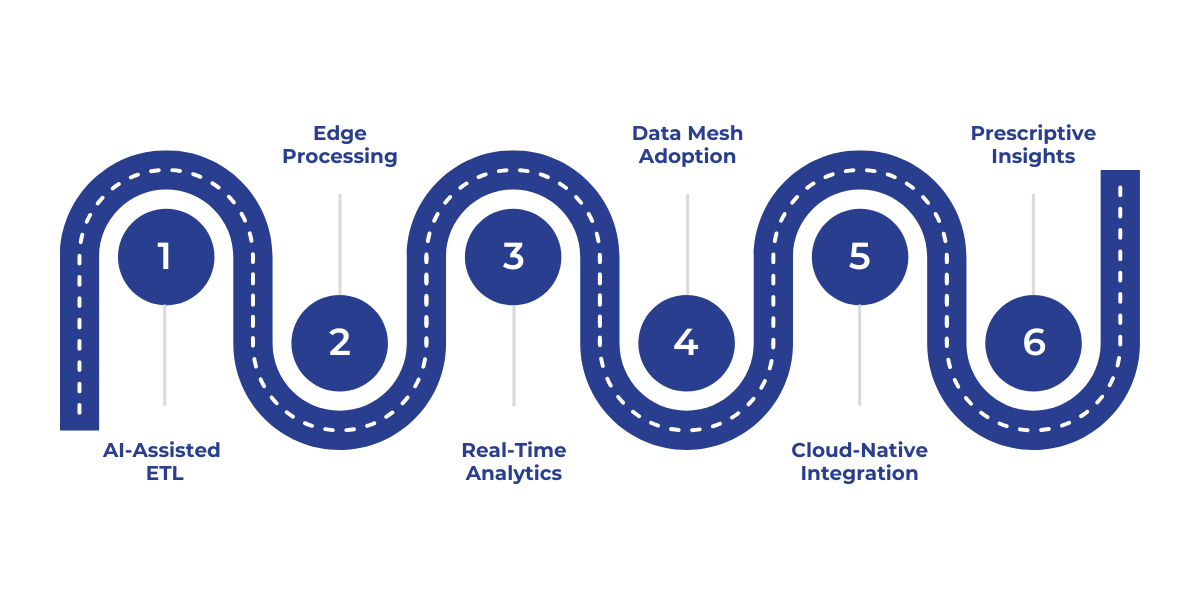

- Adopt trends like AI-assisted ETL, edge processing, real-time analytics, and data mesh for competitive advantage.

Before implementing best practices, it’s essential to understand the business goals behind data transformation, so you know what success looks like.

Key Business Goals of Data Transformation in Fabric

Data transformation in Microsoft Fabric ensures raw data aligns with your organization’s objectives. It converts fragmented inputs from multiple systems into structured, actionable outputs, supporting smarter business decisions.

Key goals include:

- Structuring Raw Data: Convert unstructured inputs into analysis-ready formats, for example by cleaning sales logs or IoT sensor data, to ensure consistency across workflows.

- Creating Unified Analytics Models: Standardize data from different sources, such as integrating CRM and ERP systems, to deliver a single, reliable view of business performance.

- Optimizing Performance: Improve query speed and storage efficiency by partitioning or compacting large datasets such as historical transactions.

- Supporting Timely Reporting: Enable accurate KPIs and dashboards, like monthly revenue summaries or real-time inventory reports, to facilitate quick decision-making.

Once the goals are clear, it’s important to see how proper transformation directly influences analytics and decision-making.

Impact of Data Transformation on Analytics

Data transformation is critical to realizing the full potential of analytics in Microsoft Fabric. Without it, raw data remains fragmented, inconsistent, and unreliable, which can lead to flawed insights and poor business outcomes.

When implemented effectively:

- Reliable KPIs and Dashboards: Standardized data ensures accurate metrics, such as precise sales dashboards, giving stakeholders confidence in decisions.

- Preparing Training Data for AI/ML: Clean, structured datasets power AI integration, including predictive modeling like customer churn forecasting, recommendation engines for personalized product suggestions, and automation workflows.

- Enhancing Forecasting and Decisions: Consistent data improves forecasting, for instance, predicting sales trends, enabling leadership to make informed, strategic business decisions.

To achieve reliable insights, we now explore the core best practices that make Microsoft Fabric data transformation effective.

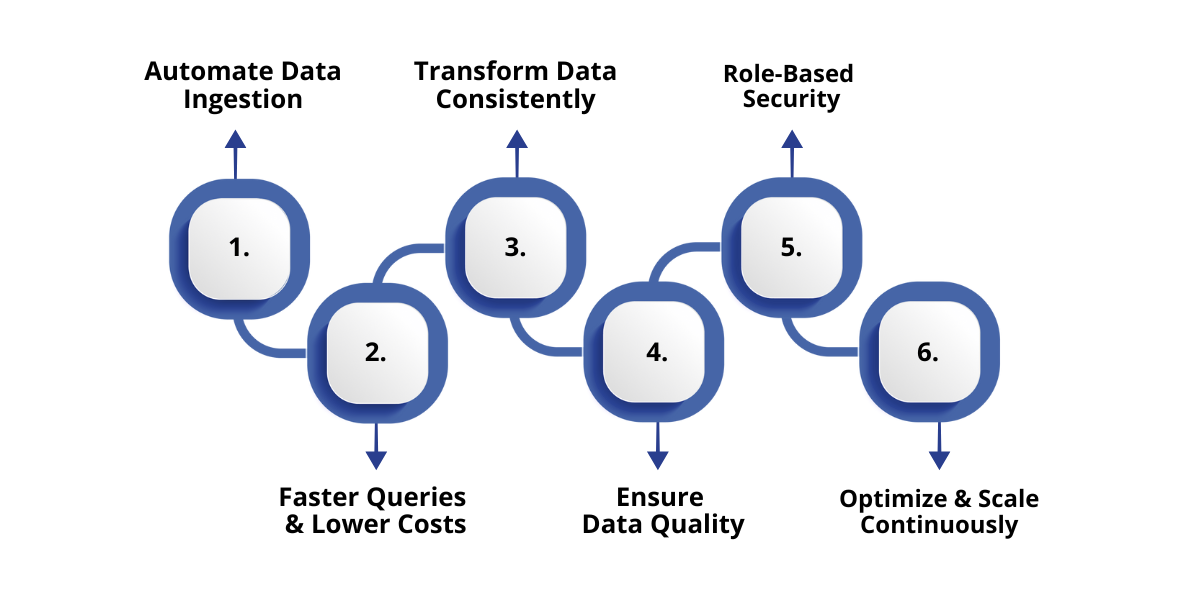

Best Practices for Data Transformation in Microsoft Fabric

A successful data transformation strategy ensures your organization can turn raw, fragmented data into actionable insights. Microsoft Fabric enables businesses to unify, clean, and prepare data at scale while supporting analytics, AI, and compliance.

Below are the key practices to implement.

1. Automate Data Ingestion and ETL/ELT Workflows

The foundation of effective data transformation is capturing the right data quickly, reliably, and consistently. Automating ingestion and ETL/ELT workflows reduces errors, accelerates processing, and ensures downstream analytics deliver accurate, actionable insights.

Consider these key points as well:

- Orchestrate Pipelines: Use Fabric Data Factory to schedule and automate both batch and streaming workloads, ensuring data is available exactly when teams need it.

- Use Low-Code Dataflows: Enable business users to clean, map, and transform operational data without relying on complex coding, increasing agility and adoption.

- Integrate Any Source: Connect SQL, NoSQL, SaaS platforms, APIs, and streaming systems, using schema mapping to standardize incoming data and simplify ingestion.
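The schema mapping idea in the last point can be sketched in a few lines of plain Python. This is a minimal illustration, not Fabric's own API: the source names (`crm`, `erp`), the field names, and the `map_record` helper are all hypothetical, and in Fabric the equivalent mapping would normally be configured in a pipeline or dataflow.

```python
from typing import Any

# Hypothetical mapping from source-specific field names to one shared schema.
FIELD_MAP = {
    "crm": {"CustomerID": "customer_id", "FullName": "name", "Email": "email"},
    "erp": {"cust_no": "customer_id", "cust_name": "name", "mail": "email"},
}


def map_record(source: str, record: dict[str, Any]) -> dict[str, Any]:
    """Rename a record's fields to the shared schema; unmapped fields are dropped."""
    mapping = FIELD_MAP[source]
    return {target: record.get(src) for src, target in mapping.items()}


crm_row = {"CustomerID": 17, "FullName": "Ada Lovelace", "Email": "ada@example.com"}
erp_row = {"cust_no": 17, "cust_name": "Ada Lovelace", "mail": "ada@example.com", "region": "EU"}

# Both rows now share the same keys, whatever system they came from.
standardized = [map_record("crm", crm_row), map_record("erp", erp_row)]
```

Keeping the mapping in one table makes it easy to review when a source system renames a column.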

2. Optimize Storage for Faster Queries and Lower Costs

Organizations using edge processing achieve faster data handling and better operational efficiency, while modern storage practices can reduce infrastructure costs by up to 60%.

Key practices include:

- Partition Large Datasets: Break large datasets such as transaction logs into smaller chunks to enable parallel processing and faster analytics.

- Compact Small Files: Merge fragmented files to reduce query latency and speed up transformations.

- Use Columnar Storage: Apply Parquet in Lakehouse or Warehouse to compress data and accelerate query execution.
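To make partitioning and compaction concrete, here is a small plain-Python sketch. It only illustrates the logic; in a Fabric Lakehouse the storage engine performs the real work, and the function names, the `date` field, and the 128 MB target below are assumptions chosen for the example.

```python
from collections import defaultdict


def partition_by_month(rows):
    """Group rows by the YYYY-MM prefix of their ISO-formatted 'date' field."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[row["date"][:7]].append(row)
    return dict(partitions)


def plan_compaction(file_sizes_mb, target_mb=128):
    """Greedily batch files (in name order) so each merged batch stays near target_mb.

    A single file larger than target_mb ends up alone in its own batch.
    """
    batches, current, current_size = [], [], 0
    for name, size in sorted(file_sizes_mb.items()):
        if current and current_size + size > target_mb:
            batches.append(current)
            current, current_size = [], 0
        current.append(name)
        current_size += size
    if current:
        batches.append(current)
    return batches


transactions = [
    {"date": "2025-01-03", "amount": 10},
    {"date": "2025-01-19", "amount": 5},
    {"date": "2025-02-01", "amount": 7},
]
by_month = partition_by_month(transactions)   # two partitions: 2025-01 and 2025-02
merge_plan = plan_compaction({"a": 60, "b": 60, "c": 60, "d": 10})
```

A query that filters on one month then only needs to read that month's partition, and fewer, larger files mean less per-file overhead.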

3. Standardize and Transform Data Consistently

By consistently structuring and transforming data, organizations can ensure their analytics deliver accurate insights that drive meaningful, actionable decisions aligned with business needs.

Key practices include:

- Implement T-SQL Logic: Apply standard transformation rules in Lakehouse or Warehouse to maintain compatibility with BI tools.

- Apply Incremental Loading: Process only new or updated data using watermarking or change data capture to save time and resources.

- Enforce Data Standards: Correct duplicates, null values, and inconsistent naming while maintaining a unified schema for a single source of truth.

Consistent data transformation creates dependable analytics and supports effective decision-making across the organization.
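Watermark-based incremental loading, mentioned above, can be sketched as follows. This is a simplified in-memory illustration under stated assumptions: the `modified_at` and `id` fields and the `incremental_load` helper are hypothetical, and a real pipeline would persist the watermark between runs.

```python
from datetime import datetime


def incremental_load(source_rows, target, watermark):
    """Upsert rows modified after `watermark` into `target`; return the new watermark."""
    new_watermark = watermark
    for row in source_rows:
        if row["modified_at"] > watermark:
            target[row["id"]] = row  # insert, or overwrite the existing row with this key
            new_watermark = max(new_watermark, row["modified_at"])
    return new_watermark


source = [
    {"id": 1, "status": "open", "modified_at": datetime(2025, 10, 1)},
    {"id": 2, "status": "open", "modified_at": datetime(2025, 10, 3)},
]
target = {}

# First run: everything is newer than the initial watermark, so both rows load.
first_watermark = incremental_load(source, target, datetime.min)

# Second run: only row 1 changed, so only row 1 is processed again.
source[0] = {"id": 1, "status": "closed", "modified_at": datetime(2025, 10, 5)}
second_watermark = incremental_load(source, target, first_watermark)
```

The strict `>` comparison assumes no row arrives later with a timestamp equal to the stored watermark; if that can happen, a small overlap window or change data capture is the safer choice.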

4. Ensure Data Quality and Reliability

High-quality data is the foundation of reliable dashboards, predictive models, and informed business decisions. Maintaining data integrity ensures analytics are trustworthy and actionable.

This strategy also includes:

- Automate Validation: Use Fabric dataflows to enforce schema and type checks automatically, preventing errors at the source.

- Cleanse Data Proactively: Fix duplicates, null values, and anomalies before the data reaches analytics tools.

- Track Errors and Lineage: Maintain audit logs to quickly identify and resolve issues while tracing data flow from origin to consumption.
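The three points above (validation, cleansing, and error tracking) fit together roughly as in this sketch. It is plain Python rather than a Fabric dataflow, and the expected schema, field names, and helper functions are hypothetical.

```python
# Hypothetical expected schema: field name -> required Python type.
EXPECTED_SCHEMA = {"order_id": int, "customer": str, "amount": float}


def validate(row):
    """Return a list of problems found in one row; an empty list means it passed."""
    errors = []
    for field, expected_type in EXPECTED_SCHEMA.items():
        value = row.get(field)
        if value is None:
            errors.append(f"{field}: missing or null")
        elif not isinstance(value, expected_type):
            errors.append(
                f"{field}: expected {expected_type.__name__}, got {type(value).__name__}"
            )
    return errors


def cleanse(rows):
    """Split rows into (clean, rejected); repeated order_id values are rejected too."""
    clean, rejected, seen = [], [], set()
    for row in rows:
        errors = validate(row)
        if not errors and row["order_id"] in seen:
            errors.append("order_id: duplicate")
        if errors:
            rejected.append({"row": row, "errors": errors})  # simple audit log entry
        else:
            seen.add(row["order_id"])
            clean.append(row)
    return clean, rejected


orders = [
    {"order_id": 1, "customer": "A", "amount": 9.5},
    {"order_id": 1, "customer": "A", "amount": 9.5},   # duplicate
    {"order_id": 2, "customer": None, "amount": 3.0},  # null value
    {"order_id": 3, "customer": "B", "amount": "12"},  # wrong type
]
clean_rows, rejected_rows = cleanse(orders)
```

Rejected rows keep the reason they failed, which gives a starting point for the audit log described in the last bullet.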

5. Protect Data with Role-Based Security

Secure your sensitive data while empowering teams to access the insights they need to act fast and make informed decisions. Strong security measures support compliance and strengthen trust across your organization.

Some more key points include:

- Assign Roles and Permissions: Control who can view, transform, or publish datasets to reduce exposure and prevent breaches.

- Use Dynamic Data Masking: Protect sensitive information such as personally identifiable data while keeping it usable for analytics.

- Integrate Governance: Use Microsoft Fabric with Purview to enforce policies, track compliance, and maintain consistent data security across your data estate.

Implementing role-based security keeps data protected and accessible, letting teams act confidently on accurate, governed information.
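As a rough illustration of how role checks and masking interact, consider the sketch below. In Fabric these controls are configured on the platform rather than written by hand, so everything here is an assumption for the sake of the example: the role names, the permission sets, and the `can`, `mask_email`, and `read_row` helpers. The masking function also assumes well-formed email addresses.

```python
# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "analyst": {"view"},
    "engineer": {"view", "transform"},
    "admin": {"view", "transform", "publish"},
}


def can(role, action):
    """True when the role exists and is allowed to perform the action."""
    return action in ROLE_PERMISSIONS.get(role, set())


def mask_email(email):
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}{'*' * max(len(local) - 1, 0)}@{domain}"


def read_row(role, row):
    """Return the row as this role is allowed to see it."""
    if not can(role, "view"):
        raise PermissionError(f"role {role!r} may not view this dataset")
    if can(role, "publish"):  # in this sketch, only admins see unmasked values
        return dict(row)
    return {**row, "email": mask_email(row["email"])}


customer = {"id": 17, "email": "ada@example.com"}
analyst_view = read_row("analyst", customer)   # email is masked
admin_view = read_row("admin", customer)       # email is visible
```

The analyst can still count, join, and group on the row, which is the point of masking: the data stays usable without exposing the raw value.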

6. Monitor, Optimize, and Scale Continuously

Reliable analytics depend on continuous monitoring of data pipelines. Ongoing oversight keeps pipelines efficient and accurate, so teams can act on insights quickly.

Key practices include:

- Track Resource Usage: Use Fabric Capacity Metrics to monitor workloads, identify bottlenecks, and allocate resources effectively.

- Optimize Workflows Regularly: Tune queries, manage refresh schedules, and remove inefficiencies to maintain peak performance.

- Set Alerts for Anomalies: Detect and resolve issues early to prevent disruptions and ensure uninterrupted access to critical insights.

Consistent monitoring and optimization of your Microsoft Fabric environment help your analytics scale smoothly while maintaining accuracy and timeliness.
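One simple way to think about anomaly alerts is to compare the latest pipeline run against a baseline of recent runs. The sketch below uses a z-score threshold; it is an illustrative approach rather than how Fabric's monitoring tools work internally, and the `is_anomalous` helper, the durations, and the threshold of 3 are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, threshold=3.0):
    """True when `latest` is more than `threshold` standard deviations above the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest > mu  # every past run took the same time; any increase stands out
    return (latest - mu) / sigma > threshold


# Hypothetical pipeline run durations in seconds.
recent_runs = [120, 118, 125, 122, 119]

normal_run = is_anomalous(recent_runs, 124)   # within the usual range
slow_run = is_anomalous(recent_runs, 300)     # far outside the usual range
```

In practice the threshold needs tuning: too low and the team is flooded with alerts, too high and real slowdowns go unnoticed.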

Even with best practices in place, challenges arise. Let’s explore common hurdles and how to overcome them effectively.

Data Transformation Challenges and Solutions in Fabric

Even with a robust platform like Microsoft Fabric, organizations face hurdles during data transformation that can affect the accuracy, speed, and reliability of their analytics.

For example, a retail enterprise reduced processing delays by 40% after standardizing schemas and implementing incremental loading, improving real-time reporting for over 200 stores.

Some key challenges and ways to overcome them include:

1. Inconsistent Schemas

Different sources often use varying formats, leading to fragmented or inaccurate data.

Solution:

- Use schema mapping and enforce data standards to unify inputs.

- Apply automated validation rules to detect discrepancies early.

2. Large Datasets

Massive volumes of data can slow processing and raise storage costs.

Solution:

- Partition large datasets and use columnar storage formats like Parquet.

- Implement incremental loading to process only new or updated data, saving time and resources.

3. Latency in Processing

Real-time insights may be delayed if pipelines are inefficient.

Solution:

- Automate ETL/ELT workflows to accelerate data movement.

- Use edge processing for faster analytics close to the source.

By proactively addressing these challenges, organizations can ensure their data is accurate, actionable, and ready for decision-making.

Once challenges are managed, it’s essential to keep an eye on emerging trends shaping data transformation strategies.

Emerging Data Transformation Trends in Fabric for 2025

As data volumes increase rapidly, businesses need faster, smarter, and cost-efficient transformation strategies to stay competitive.

Key trends include AI-assisted ETL, edge processing, real-time analytics, and data mesh.

Adopting these trends helps your data transformation strategy deliver accurate, actionable insights and positions your organization for sustainable growth and innovation.

Conclusion

Implementing best practices for data transformation in Microsoft Fabric ensures accurate, reliable, and actionable analytics.

By applying strategies such as automated ETL workflows, optimized storage, incremental loading, and consistent data standards, organizations can improve query performance, reduce errors, and streamline operations.

At WaferWire, we provide expert support throughout your Microsoft Fabric data transformation journey, from initial setup to ongoing management. We help standardize processes, maintain data quality, and ensure scalable, high-performing analytics.

Contact us today to implement Microsoft Fabric data transformation practices that maximize efficiency and deliver trustworthy insights.

FAQs

1. How can I ensure consistent and reliable data in Microsoft Fabric?

Implement standardized transformation rules, enforce schema consistency, and use incremental loading to process only new or updated data. This ensures clean, accurate, and analysis-ready datasets.

2. What are the best practices for optimizing storage in Microsoft Fabric?

Partition large datasets, merge small files, and use columnar storage formats like Parquet. These techniques reduce query latency, speed up transformations, and lower infrastructure costs.

3. How can I automate data transformation workflows?

Use Fabric Data Factory or low-code dataflows to schedule ETL/ELT pipelines. Automation reduces errors, accelerates processing, and ensures timely availability of transformed data.

4. How do I maintain data quality and reliability?

Use automated validation, proactive cleansing, and track data lineage to identify errors quickly. High-quality data ensures dashboards, AI models, and reports remain accurate and actionable.

5. How can I secure sensitive data during transformation?

Apply role-based access controls, dynamic data masking, and governance policies using Microsoft Fabric and Purview. This protects sensitive information while maintaining usability for analytics.

6. What tools can help monitor and optimize data transformation performance?

Track resource usage, query performance, and pipeline efficiency using Fabric monitoring tools. Continuous optimization ensures scalable, high-performing analytics operations.

Copyright © 2025 WaferWire Cloud Technologies
