Fabric AI Framework Overview and Integration on GitHub

Sai P

2025-07-09

As business becomes increasingly data-driven, the ability to unify data engineering, analytics, and Artificial Intelligence (AI) on one platform can significantly accelerate an organization’s decision-making.

Microsoft Fabric is designed to do exactly that. As an end-to-end, SaaS-based analytics platform, Microsoft Fabric consolidates powerful capabilities, such as data movement, data science, real-time analytics, and Business Intelligence (BI), into a single unified experience.

At the heart of Fabric’s intelligence capabilities lies the Fabric AI Framework, a flexible and scalable toolkit that enables data professionals to embed Machine Learning (ML) and AI into their workflows. However, powerful frameworks need robust development workflows. That’s where GitHub integration becomes essential.

By connecting Fabric to GitHub, teams can manage code versions, collaborate across repositories, and automate deployments using Continuous Integration (CI)/Continuous Delivery (CD) pipelines.

To understand the value of this combination, let’s first break down what the Fabric AI Framework offers.

What is the Fabric AI Framework?

The Fabric AI Framework is Microsoft Fabric’s integrated solution for embedding AI and ML directly into your data workflows. It offers a comprehensive set of tools and capabilities that enable organizations to build, train, deploy, and manage AI models across the entire data lifecycle, all within the unified Fabric environment.

The Fabric AI Framework bridges the gap between data engineering and intelligent analytics. It enables users to seamlessly integrate AI into their data pipelines, automate model training and scoring, and generate actionable insights, all while leveraging the scalability and governance features built into the Microsoft ecosystem.

To fully appreciate how Fabric AI works, let’s explore its key features and capabilities.

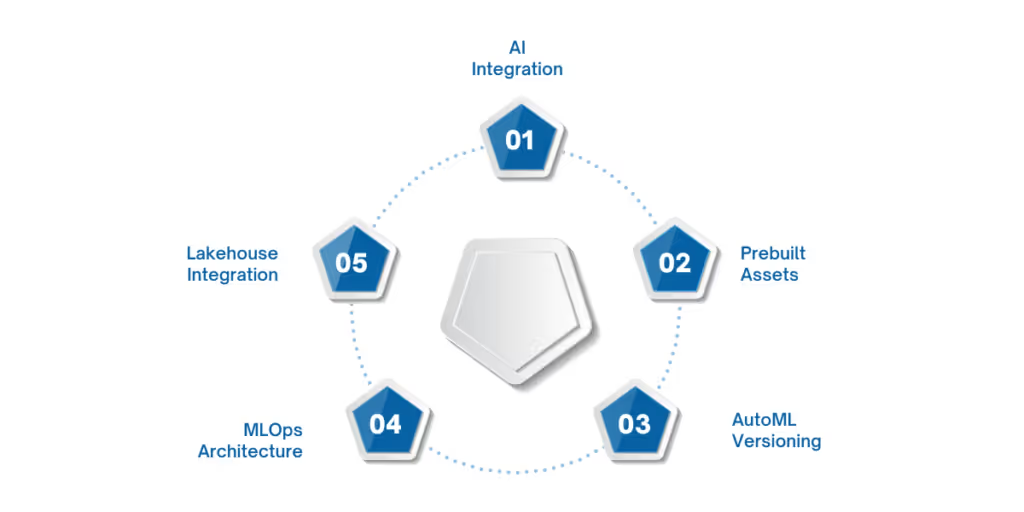

Key Features and Capabilities of Fabric AI Framework

The Fabric AI Framework is built to empower teams with powerful, production-grade AI tools that are deeply integrated with Microsoft’s modern data stack. Its features support everything from experimentation to deployment at scale, making it an ideal foundation for enterprise AI initiatives.

1. Native Support for AI Models in Pipelines

Fabric allows you to embed machine learning models directly into your data pipelines. Whether for batch or real-time scoring, models can be trained, evaluated, and deployed as part of the same workflow, minimizing context switching and speeding up iteration cycles.

2. Prebuilt ML/AI Components and Notebooks

To accelerate development, the framework offers a growing library of prebuilt components and reusable templates:

- Standardized feature engineering steps

- Model training and evaluation modules

- Sample notebooks for common use cases (e.g., classification, regression, NLP)

These resources help teams get started quickly without having to write boilerplate code from scratch.

3. AutoML Support and Model Versioning

Fabric includes AutoML capabilities, enabling users to automatically explore multiple algorithms, tune hyperparameters, and select the best-performing models. Once models are trained, they are versioned and stored within the Fabric workspace, making it easy to track performance over time, roll back changes, and promote models between environments.
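
The "try several candidates, keep the best" idea behind AutoML can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Fabric’s AutoML implementation: `threshold_model`, `select_best`, and the toy validation data are hypothetical names and values chosen only to show the selection loop.

```python
from statistics import mean

def accuracy(predict, rows):
    """Fraction of (x, label) rows where predict(x) equals the label."""
    return mean(1.0 if predict(x) == y else 0.0 for x, y in rows)

def threshold_model(threshold):
    """Hypothetical one-parameter classifier: predict 1 when x >= threshold."""
    return lambda x: 1 if x >= threshold else 0

def select_best(candidates, validation_rows):
    """Score each hyperparameter set on held-out rows; return (params, score) of the best."""
    scored = [
        (params, accuracy(threshold_model(**params), validation_rows))
        for params in candidates
    ]
    return max(scored, key=lambda item: item[1])

# Toy held-out data: (feature value, true label)
validation = [(0.1, 0), (0.4, 0), (0.6, 1), (0.9, 1)]
grid = [{"threshold": t} for t in (0.2, 0.5, 0.8)]
best_params, best_score = select_best(grid, validation)
```

A real AutoML run searches across algorithms as well as hyperparameters, but the selection step follows the same pattern: score every candidate on data it was not trained on and keep the winner.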

4. MLOps-Friendly Architecture

The framework supports MLOps best practices by integrating with the following:

- GitHub for source control and collaboration

- CI/CD pipelines for model deployment and retraining

- Azure Machine Learning for model monitoring and governance

This architecture ensures that models transition smoothly from experimentation to production, maintaining traceability and compliance.

5. Integration with Lakehouse and OneLake

Fabric’s native Lakehouse architecture and OneLake storage system unify structured, unstructured, and streaming data. This provides AI models with immediate access to enterprise-wide data, eliminating duplication.

The result: simplified data access, faster training, and consistent data governance across analytics and AI workloads.

To understand how these features come together in practice, it’s essential to examine GitHub’s role in the overall architecture.

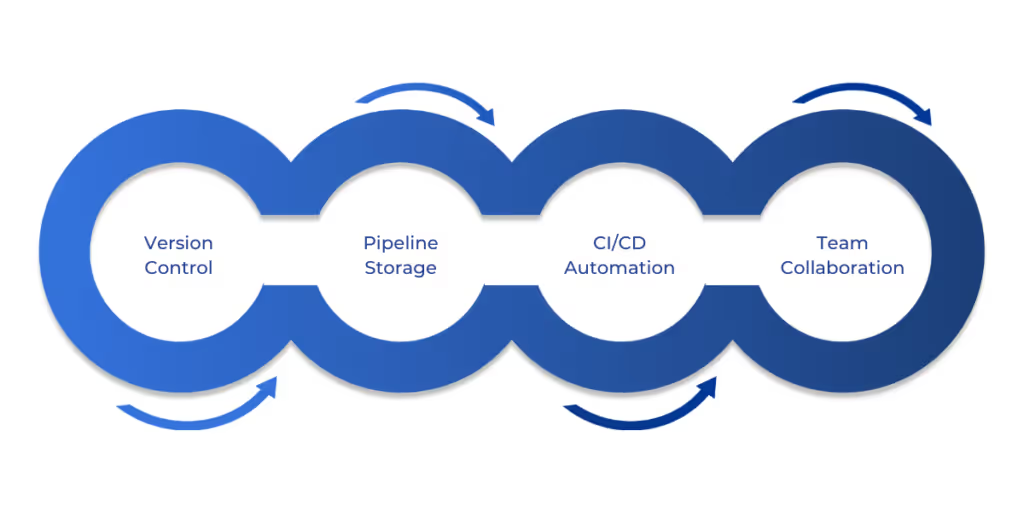

Role of GitHub in the Fabric AI Framework Architecture

GitHub plays a critical role in enabling collaborative development and robust DevOps practices within the Fabric AI Framework:

- Version Control: Track changes in notebooks, ML scripts, pipeline configurations, and YAML files. Use GitHub branches and pull requests to manage experimentation and code review.

- Pipeline Storage: Store data pipeline definitions and ML workflows as code to enable reproducibility and consistent deployment across environments.

- CI/CD Automation: Automate deployment of models, pipelines, and dashboards using GitHub Actions or Azure DevOps. Example: Trigger model retraining and redeployment automatically when a new dataset is ingested, or a notebook is updated.

- Team Collaboration: GitHub repositories let cross-functional teams (data engineers, data scientists, and analysts) collaborate in a centralized environment with transparency and accountability.
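
As a rough sketch of the CI/CD automation described above, a workflow step could decide which jobs to run from the list of files changed in a push. The folder names follow the repository layout used later in this article; the job names in `FOLDER_TO_JOB` are hypothetical placeholders, not GitHub or Fabric identifiers.

```python
from pathlib import PurePosixPath

# Hypothetical mapping from top-level repo folder to the CI job it should trigger.
FOLDER_TO_JOB = {
    "notebooks": "retrain-model",
    "scripts": "retrain-model",
    "pipelines": "deploy-pipeline",
    "models": "deploy-model",
}

def jobs_for_changes(changed_files):
    """Return the sorted, de-duplicated jobs implied by a list of changed repo paths."""
    jobs = set()
    for path in changed_files:
        parts = PurePosixPath(path).parts
        # Only files inside a known top-level folder trigger a job.
        if len(parts) > 1 and parts[0] in FOLDER_TO_JOB:
            jobs.add(FOLDER_TO_JOB[parts[0]])
    return sorted(jobs)
```

For example, a push that touches only `notebooks/02_train_model.ipynb` and `README.md` would map to a single retraining job, while documentation-only changes would trigger nothing.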

So, how do you connect these systems in practice? Let’s walk through the integration process.

Step-by-Step Guide to Setting and Managing GitHub Integration

Integrating Microsoft Fabric with GitHub enables a seamless development lifecycle, facilitating version control, team collaboration, and automated deployment for AI and analytics projects.

Below is a step-by-step guide to setting up and managing GitHub integration effectively.

Step 1: Set Up Your GitHub Repository

- Create a new repository on GitHub (public or private).

- Organize folders for:

  - /notebooks/: Jupyter or Spark notebooks

  - /pipelines/: YAML pipeline definitions

  - /models/: Serialized models or metadata

  - /scripts/: Python, SQL, or Spark scripts
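
If you prefer to script the layout rather than create folders by hand, the following is one possible sketch. The `.gitkeep` placeholder file is a common convention (Git does not track empty directories), not something Fabric requires, and `scaffold_repo` is a hypothetical helper name.

```python
from pathlib import Path

# Folder names taken from the layout listed above.
REPO_FOLDERS = ("notebooks", "pipelines", "models", "scripts")

def scaffold_repo(root):
    """Create the Step 1 folders under root, each with a .gitkeep so Git tracks it."""
    created = []
    for name in REPO_FOLDERS:
        folder = Path(root) / name
        folder.mkdir(parents=True, exist_ok=True)  # safe to re-run
        (folder / ".gitkeep").touch()
        created.append(folder)
    return created
```

Running `scaffold_repo(".")` from the root of a fresh clone would create the four folders, ready to commit.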

Step 2: Link GitHub Repo to Fabric Workspace

- In Fabric, open your workspace and go to Workspace settings > Git integration.

- Choose GitHub as the provider.

- Authenticate using OAuth or a Personal Access Token (PAT) with at least repo, workflow, and read:org permissions.

- Select the target GitHub organization and repository.

- Choose a default branch (e.g., main or dev).

Step 3: Configure Sync Settings

- Enable two-way sync for notebooks and pipeline artifacts.

- Define commit message templates (optional).

- Set file format preferences (e.g., .ipynb or .py for notebooks).

Step 4: CI/CD Setup with GitHub Actions or Azure DevOps

You can automate testing, training, and deployment with either GitHub Actions or Azure DevOps:

GitHub Actions: Sample Workflow

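    # Runs on every push to main
    name: Fabric AI CI/CD

    on:
      push:
        branches: [ main ]

    jobs:
      deploy:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout Code
            uses: actions/checkout@v4

          - name: Deploy Pipeline to Fabric
            run: |
              # Sign in with a service principal; credentials come from GitHub Secrets
              az login --service-principal -u ${{ secrets.CLIENT_ID }} -p ${{ secrets.CLIENT_SECRET }} --tenant ${{ secrets.TENANT_ID }}
              # Placeholder deploy step: confirm this command exists in your installed
              # CLI version, or replace it with the deployment call your tooling provides
              az fabric pipeline deploy --name "CustomerChurnPipeline" --file pipelines/churn_pipeline.yml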

Azure DevOps:

Use a pipeline YAML file with steps to:

- Pull from GitHub

- Validate ML code/scripts

- Deploy notebooks and models to Fabric

Connect Azure DevOps to Fabric via service principal and API integration.

Step 5: Syncing Notebooks, Pipelines, and Code

Once the integration is active:

- Commit notebook edits from the workspace’s Source control panel to push them to GitHub. At the time of writing, Fabric does not commit to Git automatically on save.

- Changes made in GitHub (via pull request or direct commit) are reflected in Fabric after re-sync.

- Use commit messages to log model versions or pipeline revisions.

You can also tag stable versions using Git tags (e.g., v1.0.0, v2.1.1), which helps with rollbacks and release management.
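
To show how version tags support rollbacks, here is a small sketch that picks the most recent release tag below the current one. It assumes tags follow the `vMAJOR.MINOR.PATCH` pattern used in the examples above; `parse_tag` and `rollback_target` are hypothetical helper names.

```python
import re

TAG_PATTERN = re.compile(r"^v(\d+)\.(\d+)\.(\d+)$")

def parse_tag(tag):
    """Return (major, minor, patch) for tags like v1.0.0, or None if the tag doesn't match."""
    match = TAG_PATTERN.match(tag)
    return tuple(int(part) for part in match.groups()) if match else None

def rollback_target(tags, current):
    """Return the highest release tag strictly below `current`, or None if there is none."""
    current_version = parse_tag(current)
    if current_version is None:
        raise ValueError(f"not a release tag: {current}")
    older = [
        tag for tag in tags
        if parse_tag(tag) is not None and parse_tag(tag) < current_version
    ]
    # Compare numerically so v1.10.0 sorts above v1.2.0.
    return max(older, key=parse_tag, default=None)
```

Comparing parsed tuples rather than raw strings matters here: a plain string sort would place `v1.10.0` before `v1.2.0`.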

Once integration is in place, you’ll want to understand how to structure and manage your AI projects for maximum efficiency. That’s where best practices come into play.

Best Practices for Fabric AI Framework

To ensure scalability, maintainability, and security in your AI projects built on Microsoft Fabric and GitHub, it’s essential to follow structured development and DevOps principles. Below are recommended best practices across architecture, security, and operational management.

1. Folder Structure for Fabric Projects on GitHub

Organizing your repository with a standardized structure improves collaboration and speeds up team onboarding. A suggested layout is the one from Step 1 (/notebooks/, /pipelines/, /models/, and /scripts/), plus a configs/ folder for environment settings. Prefix files with numbers or labels (01_eda.ipynb, 02_train_model.ipynb) to suggest execution order.
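
The numeric-prefix convention also makes execution order easy to derive in code. The sketch below is one way to do it; `execution_order` is a hypothetical helper, and placing unprefixed files last is a design choice rather than a rule.

```python
import re

PREFIX = re.compile(r"^(\d+)_")

def execution_order(filenames):
    """Sort notebooks by numeric prefix; files without a prefix go last, alphabetically."""
    def key(name):
        match = PREFIX.match(name)
        # Prefixed files sort by their number; others fall into a second group.
        return (0, int(match.group(1)), name) if match else (1, 0, name)
    return sorted(filenames, key=key)
```

Parsing the prefix as an integer keeps `10_report.ipynb` after `02_train_model.ipynb` even if someone forgets to zero-pad.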

2. Using Environment Variables and Secrets Securely

Avoid hardcoding credentials, API keys, or workspace identifiers in your code or YAML files.

- Store secrets in GitHub Secrets (Settings > Secrets and variables) and reference them using ${{ secrets.MY_SECRET }}.

- For Azure-specific authentication:

  - Use a Service Principal and store its client_id, tenant_id, and client_secret in GitHub Secrets.

  - Prefer az login with service principal credentials during CI/CD workflows.

- For runtime environments (e.g., model parameters, environment flags), use a centralized config file or .env file managed securely.
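
On the script side, a fail-fast loader keeps credentials out of the code and surfaces configuration gaps early. This sketch assumes the workflow exposes the secrets as environment variables named `CLIENT_ID`, `TENANT_ID`, and `CLIENT_SECRET` (matching the secret names in the sample workflow); `load_credentials` is a hypothetical helper.

```python
import os

# Assumed variable names, mirroring the secrets used in the sample workflow.
REQUIRED = ("CLIENT_ID", "TENANT_ID", "CLIENT_SECRET")

def load_credentials(env=None):
    """Read required settings from the environment and fail fast if any are missing."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        # Report variable names only; never echo secret values into logs.
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED}
```

Accepting an optional `env` mapping makes the function easy to test without touching the real process environment.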

3. Reusability and Modular Pipeline Design

Build your pipelines and models in a modular fashion to encourage reuse and reduce duplication:

- Split large notebooks into reusable chunks:

  - data_preparation.ipynb

  - feature_engineering.ipynb

  - train_model.ipynb

- Store reusable transformation scripts (e.g., one-hot encoding, null handling) in /scripts/ and import them where needed.

- Parameterize pipeline YAML files using configs/ so they can run in dev, test, or prod environments with minimal changes.

- Use notebook parameters or command-line flags to control runtime behavior.
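
One simple way to parameterize runs per environment is a shared base config with small per-environment overrides. The sketch below assumes JSON files named `base.json` and `<environment>.json` inside configs/; those file names, and the `load_config` helper, are illustrative assumptions rather than a Fabric convention.

```python
import json
from pathlib import Path

def load_config(config_dir, environment):
    """Merge base.json with <environment>.json from config_dir; environment values win."""
    config_dir = Path(config_dir)
    base = json.loads((config_dir / "base.json").read_text())
    override_path = config_dir / f"{environment}.json"
    # An environment without its own file simply uses the base settings.
    overrides = json.loads(override_path.read_text()) if override_path.exists() else {}
    return {**base, **overrides}
```

A pipeline step could then call `load_config("configs", "prod")` and read every environment-specific value from the returned dictionary instead of branching on the environment name in code.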

4. Monitoring and Model Governance

Operationalizing AI responsibly means tracking performance, lineage, and access. Fabric and Azure provide built-in tools to help.

- Model Registry (via Azure ML):

  - Track model versions, associated datasets, and metrics

  - Log metadata: model accuracy, date, creator, environment

- Monitoring and Drift Detection:

  - Set up alerts when model performance drops below a threshold

  - Use Power BI or Application Insights for logging metrics in production

- Audit and Access Control:

  - Implement role-based access at Fabric workspace and GitHub levels

  - Enable audit logs to track code changes, retraining events, and access history

- Compliance:

  - Maintain documentation of data sources, feature logic, and model fairness assessments

  - Use GitHub issues or project boards for model review and risk sign-offs
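
The threshold alert mentioned under Monitoring and Drift Detection can be as simple as the check below. It is a sketch only: the window size, the metric, and the `performance_alert` name are assumptions, and a production setup would typically rely on the monitoring service’s own alert rules.

```python
from statistics import mean

def performance_alert(recent_scores, threshold, window=3):
    """Return True when the mean of the last `window` scores falls below `threshold`."""
    if not recent_scores:
        return False  # nothing measured yet, so nothing to alert on
    tail = recent_scores[-window:]
    return mean(tail) < threshold
```

Averaging over a short window rather than alerting on a single score reduces noise from one unusually bad batch.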

Even with best practices, though, technical issues can still arise. Let’s look at how to troubleshoot the most common ones.

Troubleshooting & Common Issues

While Microsoft Fabric and GitHub integration streamlines AI development, certain technical hiccups can slow you down. Here’s a breakdown of frequent issues and how to resolve them quickly.

1. GitHub Sync Errors and Resolutions

Issue: Notebooks or pipeline files not syncing between Fabric and GitHub.

Resolution:

- Check repository permissions: Ensure Fabric has write access to the selected branch.

- Verify branch name: Fabric syncs only with the specified branch. A mismatched branch name can cause the sync to fail silently.

- Manual sync trigger: In Fabric, go to the Git Integration panel > click "Sync now" to force a refresh.

- File naming conflicts: Avoid unsupported characters in file names, and stick to the expected file types: .ipynb or .py for notebooks, and .yml or .json for pipelines.
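
A small pre-commit style check can catch naming problems before they reach a sync. In this sketch the allowed extensions come from the bullet above, while the "safe character" pattern is an assumption (letters, digits, dot, underscore, hyphen) and not a documented Fabric rule.

```python
import re
from pathlib import PurePosixPath

# Expected file types per top-level folder, as listed above.
ALLOWED_SUFFIXES = {
    "notebooks": {".ipynb", ".py"},
    "pipelines": {".yml", ".json"},
}
# Assumption: restrict names to letters, digits, dot, underscore, and hyphen.
SAFE_NAME = re.compile(r"^[A-Za-z0-9._-]+$")

def naming_problems(paths):
    """Return (path, reason) pairs for files that break the naming conventions."""
    problems = []
    for path in paths:
        p = PurePosixPath(path)
        folder = p.parts[0] if len(p.parts) > 1 else ""
        if not SAFE_NAME.match(p.name):
            problems.append((path, "unsupported characters"))
        elif folder in ALLOWED_SUFFIXES and p.suffix not in ALLOWED_SUFFIXES[folder]:
            problems.append((path, "unexpected file type"))
    return problems
```

Files outside the two checked folders, such as a top-level README, are only checked for characters, not for extension.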

2. Permission and Authentication Pitfalls

Issue: Authentication fails when connecting Fabric to GitHub or deploying via GitHub Actions.

Resolution:

- Personal Access Token (PAT):

  - Must include at least: repo, workflow, read:org

  - Regenerate the PAT if it has expired and update it in Fabric/GitHub Secrets.

- Service Principal Errors (CI/CD):

  - Ensure CLIENT_ID, TENANT_ID, and CLIENT_SECRET are valid and not expired.

  - The service principal must have access to the Fabric workspace through a workspace role (e.g., Contributor), and the tenant must allow service principals to call Fabric APIs.

Issue: 403 or 404 errors when pushing or pulling from GitHub.

Resolution:

- Double-check OAuth scopes or PAT permissions.

- Confirm that the credentials can actually see the repository. GitHub returns 404, not 403, for a private repository the token has no access to.

- Enable GitHub API access in organizational settings if you're using GitHub Enterprise.

3. CI/CD Configuration Mismatches

Issue: The GitHub Action runs but fails during the deployment step.

Resolution:

- Missing CLI tool: Ensure your runner installs az CLI or Fabric-specific extensions.

- Incorrect workspace name: Fabric CLI expects exact workspace names. Misspellings cause silent failures.

- Incorrect paths: Use relative paths (e.g., pipelines/churn_score.yml) and ensure those files are committed to the repo.

- Debugging tip: Add --verbose flags or log each command step to capture errors more clearly.
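
Path mistakes are cheap to catch before the deployment step runs. The following sketch validates that each file a workflow refers to is given as a relative path and exists in the checked-out repository; `check_deploy_paths` is a hypothetical helper you could call from an earlier workflow step.

```python
from pathlib import Path, PurePosixPath

def check_deploy_paths(repo_root, paths):
    """Return error strings for paths that are absolute or missing under repo_root."""
    errors = []
    for raw in paths:
        if PurePosixPath(raw).is_absolute():
            errors.append(f"{raw}: use a path relative to the repository root")
        elif not (Path(repo_root) / raw).is_file():
            errors.append(f"{raw}: file not found (is it committed?)")
    return errors
```

An empty list means every referenced file is present; otherwise, print the errors and exit non-zero so the job stops before attempting the deployment.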

Issue: Environment variables not working in GitHub Actions.

Resolution:

- Ensure your secrets are set in Repository Settings > Secrets and Variables > Actions.

- Reference them correctly: ${{ secrets.MY_SECRET_NAME }}.

- For shared workflows, consider using Organization-level secrets.

Conclusion

Microsoft Fabric is rapidly transforming the way organizations build, scale, and operationalize AI projects, bringing together data engineering, ML, and analytics into a unified platform. When combined with GitHub’s powerful version control, collaboration, and CI/CD capabilities, Fabric becomes a true end-to-end environment for AI innovation.

From orchestrating data pipelines and training models to visualizing insights and deploying workflows, the Fabric AI Framework empowers data teams to work faster and smarter, while ensuring code quality, reproducibility, and governance through seamless GitHub integration.

Ready to streamline your AI workflows and unlock the true power of GitHub-integrated Fabric pipelines?

At WaferWire, we specialize in helping businesses like yours implement, optimize, and scale AI solutions using Microsoft Fabric and GitHub CI/CD best practices. From setting up secure development environments to automating deployment pipelines, our experts ensure your data and AI initiatives are robust, compliant, and future-ready.

Contact WaferWire today for a personalized consultation and discover how we can accelerate your journey to operational AI with Microsoft Fabric and GitHub integration.

Copyright © 2025 WaferWire Cloud Technologies
