Change Data Capture in Microsoft Fabric Explained

Murthy

5th Aug 2025



Data never stops moving, and neither do the systems that rely on it. But keeping analytics up to date with real-time changes is a challenge when you're dealing with static batch jobs and fragmented pipelines. That’s where Change Data Capture (CDC) steps in.

CDC isn’t just another integration feature. It’s a foundational capability for streaming insights, live dashboards, and responsive data platforms. And with Microsoft Fabric introducing native CDC support, teams can now sync changes from operational systems to analytics workloads with precision, speed, and scale.

This blog breaks down how Microsoft Fabric handles CDC, the benefits it unlocks, and how to implement it effectively to power a modern, responsive data strategy.

Key Takeaways

- Change Data Capture (CDC) tracks and replicates real-time changes from source systems to downstream platforms.

- Microsoft Fabric supports CDC natively, enabling near real-time data movement into OneLake through Data Factory.

- Common CDC types include log-based, trigger-based, and timestamp-based methods.

- CDC reduces latency, improves data freshness, and supports event-driven architectures.

- Choosing the right CDC tool depends on latency, reliability, scalability, and operational complexity.

- WaferWire helps organizations implement CDC in Microsoft Fabric for high-performance data delivery.

What is Change Data Capture (CDC)?

Change Data Capture (CDC) is the real-time answer to a problem that’s plagued data teams for years: how to keep systems aligned without constantly reprocessing entire datasets.

At its core, CDC monitors a database for insert, update, and delete operations, then captures only those changes and pushes them downstream. That means no more waiting on bulky batch jobs or working with stale snapshots.

This isn’t just about data movement. It’s about precision, speed, and operational efficiency. With CDC, every update to a record can be reflected in your reporting tools, cloud storage, or machine learning pipelines almost immediately, without hammering your source systems.

CDC is now the backbone of modern data architectures. It powers everything from zero-downtime cloud migrations to real-time analytics and continuous lakehouse feeds. If you're building anything that depends on live, accurate data, CDC isn’t optional; it’s essential.

How Does Change Data Capture Work?

Change Data Capture works by watching your data like a hawk, tracking every insert, update, or delete as it happens and streaming those changes downstream in real time. While the idea is simple, the mechanics behind it are anything but.

Each time a transaction occurs, CDC taps into the database to catch the change. This can be done in several ways:

- Polling tables for rows with newer timestamps

- Firing triggers at the row level

- Reading directly from the database’s transaction log

Each approach has trade-offs. Timestamp-based CDC is easier to implement but misses hard deletes and any intermediate updates that happen between polls. Triggers capture every change precisely but add work to every write. Transaction log readers are the most efficient but require deeper database access and permissions. The choice affects everything from system load to latency to how soon your analytics reflect reality.

And the magic doesn’t stop at capture.

Once changes are detected, modern CDC systems don’t just forward raw data; they stream events to destinations like Azure Data Lake Storage, Snowflake, Databricks, or lakehouses in Microsoft Fabric. Along the way, they can clean, transform, and enrich the data in flight. So instead of shipping noise, you’re delivering ready-to-query, business-ready datasets, in some setups with sub-second latency.

That means your dashboards light up with live data. Your models retrain faster. And your decisions are based on what’s happening now, not hours ago.

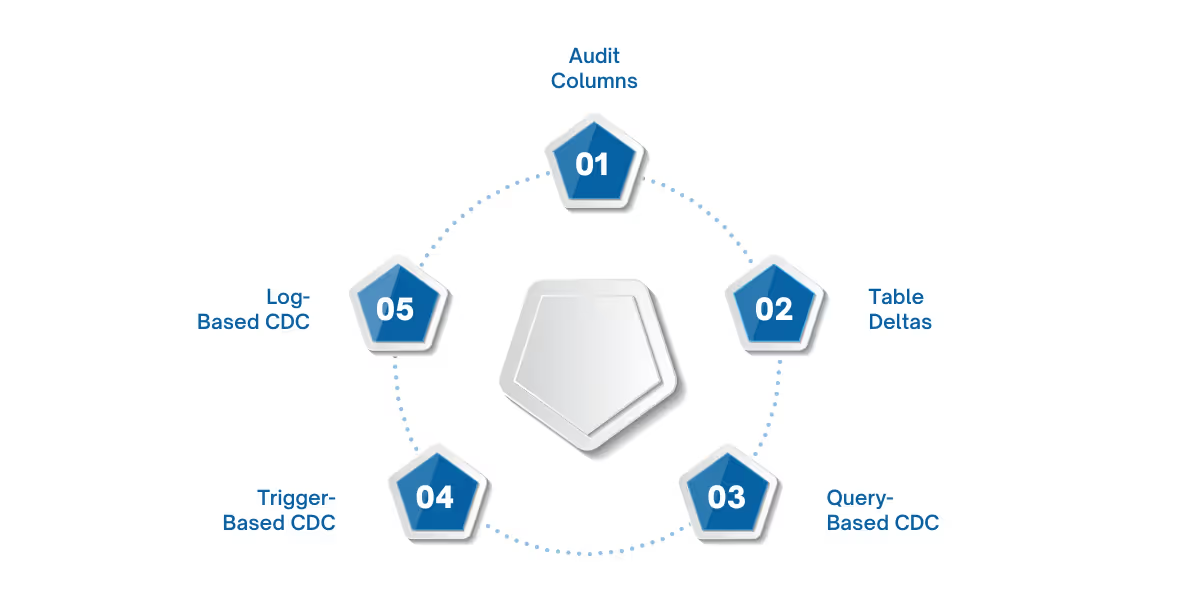

Types of CDC Methods

CDC isn’t a one-size-fits-all implementation. The way you capture change depends heavily on your infrastructure, data volume, performance requirements, and tolerance for complexity. Each method comes with trade-offs, and knowing them helps you choose the right fit for your data architecture.

1. Audit Columns

This is the most straightforward CDC method. It uses “LAST_UPDATED” or “DATE_MODIFIED” columns, either existing ones or those you add yourself, to track changes.

Here’s how it works:

- Pull the latest timestamp from the destination table.

- Fetch records from the source with a more recent Created_Time or Updated_Time.

- Insert or update the destination accordingly.
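The three steps above can be sketched with Python’s built-in `sqlite3` module. This is a minimal illustration, not a production loader: the `orders` table, its `updated_time` column, and the `make_db` and `incremental_load` helpers are hypothetical, and two in-memory databases stand in for the source and destination. Timestamps are stored as ISO-8601 strings so they compare correctly as text.

```python
import sqlite3

def make_db() -> sqlite3.Connection:
    # Hypothetical table; any table with a reliable "last updated" column works.
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_time TEXT)"
    )
    return conn

def incremental_load(src: sqlite3.Connection, dst: sqlite3.Connection) -> int:
    """Copy rows changed since the destination's latest timestamp; return the count."""
    # 1. Pull the latest timestamp already present in the destination table.
    (watermark,) = dst.execute(
        "SELECT COALESCE(MAX(updated_time), '') FROM orders"
    ).fetchone()
    # 2. Fetch source rows with a more recent timestamp.
    rows = src.execute(
        "SELECT id, status, updated_time FROM orders WHERE updated_time > ?",
        (watermark,),
    ).fetchall()
    # 3. Insert new rows or update existing ones (an upsert on the primary key).
    dst.executemany(
        "INSERT INTO orders (id, status, updated_time) VALUES (?, ?, ?) "
        "ON CONFLICT(id) DO UPDATE SET status = excluded.status, "
        "updated_time = excluded.updated_time",
        rows,
    )
    dst.commit()
    return len(rows)

src, dst = make_db(), make_db()
src.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "new", "2025-08-01T10:00"), (2, "new", "2025-08-01T10:05")],
)
print(incremental_load(src, dst))  # 2: the first run copies everything
src.execute("UPDATE orders SET status = 'shipped', updated_time = '2025-08-01T11:00' WHERE id = 1")
print(incremental_load(src, dst))  # 1: only the updated row is copied
```

Note what this sketch cannot do: if a row is deleted from the source, nothing in the `WHERE updated_time > ?` filter ever sees it, which is exactly the first limitation listed below.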

Pros:

- Easy to implement at the application level.

- No external tools required.

Cons:

- Can’t track deletes without additional scripting.

- Requires scanning tables, which increases CPU usage.

- Vulnerable to inconsistencies if timestamp logic isn’t applied consistently across all tables.

Bottom line: audit columns are great for lightweight use cases but tend to break at scale.

2. Table Deltas

The delta approach compares snapshots of source and destination tables to identify what’s changed. SQL scripts or utilities like tablediff do the heavy lifting.
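At its simplest, the comparison classifies each primary key by whether it appears in the previous snapshot, the current one, or both. The sketch below is an illustration only: plain Python dictionaries keyed by primary key stand in for table snapshots, and `table_delta` is a hypothetical helper, not how tablediff works internally. Holding both snapshots in full is also what makes this method expensive at scale.

```python
def table_delta(previous: dict, current: dict) -> dict:
    """Compare two snapshots keyed by primary key and classify the changes."""
    # Keys only in the current snapshot were inserted.
    inserts = {k: current[k] for k in current.keys() - previous.keys()}
    # Keys only in the previous snapshot were deleted.
    deletes = sorted(previous.keys() - current.keys())
    # Keys in both snapshots whose row values differ were updated.
    updates = {
        k: current[k]
        for k in current.keys() & previous.keys()
        if current[k] != previous[k]
    }
    return {"inserts": inserts, "updates": updates, "deletes": deletes}

previous = {1: ("alice", "gold"), 2: ("bob", "silver"), 3: ("carol", "bronze")}
current = {1: ("alice", "platinum"), 3: ("carol", "bronze"), 4: ("dave", "silver")}
print(table_delta(previous, current))
# {'inserts': {4: ('dave', 'silver')}, 'updates': {1: ('alice', 'platinum')}, 'deletes': [2]}
```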

Pros:

- Can be implemented using standard SQL.

- Detects inserts, updates, and deletes accurately.

Cons:

- Requires maintaining three full data copies: current, previous, and target.

- Doesn’t scale well under high transaction volumes.

- Latency is high, and resource costs climb fast with data volume.

This method is best reserved for low-frequency jobs where precision is more important than speed.

3. Query-Based CDC (Polling)

Query-based CDC relies on repeatedly scanning the source database for new or changed rows using timestamp columns.

Pros:

- Easy to set up.

- No changes to source system architecture.

Cons:

- High query overhead.

- Misses intermediate states when a row changes more than once between polls.

- Cannot capture hard deletes.

It’s a poor fit for high-volume production workloads because the inefficiencies pile up quickly.
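The two correctness gaps are easy to reproduce. In this toy simulation, a dictionary stands in for the source table and integer timestamps act as a logical clock; the `source` dictionary and `poll` function are hypothetical. Between two polls, one row changes twice and another is hard-deleted.

```python
source = {}  # id -> (status, updated_at); a stand-in for a source table

def poll(since: int) -> list:
    """Return (id, status) for rows whose updated_at is later than `since`."""
    return sorted((k, v[0]) for k, v in source.items() if v[1] > since)

source[1] = ("created", 1)
source[2] = ("created", 2)
first = poll(since=0)   # [(1, 'created'), (2, 'created')]

# Between polls: row 1 changes twice and row 2 is hard-deleted.
source[1] = ("paid", 3)
source[1] = ("shipped", 4)
del source[2]
second = poll(since=2)  # [(1, 'shipped')]
```

The second poll reports only the final state of row 1: the intermediate `paid` status is lost, and nothing signals that row 2 was deleted.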

4. Trigger-Based CDC

Database triggers capture changes by firing procedures on INSERT, UPDATE, or DELETE events and writing those changes to a separate changelog table.
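Here is a minimal sketch of that pattern using SQLite triggers through Python’s `sqlite3` module. The `customers` and `customers_changelog` tables and the trigger names are hypothetical; production databases use their own trigger syntax, but the idea is the same: every write also writes a row to the changelog, inside the same transaction.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT);
CREATE TABLE customers_changelog (
    seq   INTEGER PRIMARY KEY AUTOINCREMENT,  -- preserves capture order
    op    TEXT NOT NULL,                      -- 'I', 'U' or 'D'
    id    INTEGER NOT NULL,
    email TEXT                                -- new value; NULL for deletes
);
CREATE TRIGGER customers_ins AFTER INSERT ON customers BEGIN
    INSERT INTO customers_changelog (op, id, email) VALUES ('I', NEW.id, NEW.email);
END;
CREATE TRIGGER customers_upd AFTER UPDATE ON customers BEGIN
    INSERT INTO customers_changelog (op, id, email) VALUES ('U', NEW.id, NEW.email);
END;
CREATE TRIGGER customers_del AFTER DELETE ON customers BEGIN
    INSERT INTO customers_changelog (op, id, email) VALUES ('D', OLD.id, NULL);
END;
""")

conn.execute("INSERT INTO customers VALUES (1, 'a@example.com')")
conn.execute("UPDATE customers SET email = 'b@example.com' WHERE id = 1")
conn.execute("DELETE FROM customers WHERE id = 1")
for row in conn.execute("SELECT op, id, email FROM customers_changelog ORDER BY seq"):
    print(row)
# ('I', 1, 'a@example.com')
# ('U', 1, 'b@example.com')
# ('D', 1, None)
```

Every captured change costs an extra insert on the source, which is where the per-transaction overhead noted below comes from.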

Pros:

- Captures inserts, updates, and deletes.

- Records every change, including intermediate updates, as it happens.

Cons:

- Adds compute overhead to every transaction.

- Difficult to maintain across schema changes.

- Tight coupling between capture logic and the source schema makes scaling painful.

Triggers are precise, but they come at a cost: performance and complexity.

5. Log-Based CDC

Log-based CDC is the enterprise standard. It taps directly into the database’s native transaction logs (like Oracle redo logs or SQL Server’s transaction logs) to read change events in real time.

Pros:

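- Minimal impact on production queries, since nothing extra runs against source tables.

- Handles large data volumes at low latency, often sub-second.

- Preserves commit order, because changes are read in the order they were written to the log.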

Cons:

- Requires log-reading permissions and tooling support.

- May need configuration changes depending on your DBMS.

Log-based CDC is purpose-built for real-time, high-throughput environments. Tools like Striim and Debezium rely on this method for good reason: it scales well and stays largely invisible to your transactional systems.
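The consumer side of log-based CDC can be illustrated with an ordered list of log records, each carrying a log sequence number (LSN). This is a simplified sketch under stated assumptions: a Python list stands in for the redo or transaction log, and `LogRecord` and `apply_from_log` are hypothetical names. Real tools read the database’s log through DBMS-specific mechanisms. The key ideas shown are applying changes strictly in log order and resuming from a checkpoint so replays are idempotent.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class LogRecord:
    lsn: int                     # log sequence number; strictly increasing
    op: str                      # "insert", "update" or "delete"
    key: int
    value: Optional[str] = None

def apply_from_log(log, target: dict, checkpoint: int) -> int:
    """Apply records with lsn > checkpoint in log order; return the new checkpoint."""
    for rec in log:
        if rec.lsn <= checkpoint:
            continue  # already applied before a restart; skipping keeps replays idempotent
        if rec.op == "delete":
            target.pop(rec.key, None)
        else:
            target[rec.key] = rec.value
        checkpoint = rec.lsn  # a real reader would persist this durably
    return checkpoint

log = [
    LogRecord(1, "insert", 7, "pending"),
    LogRecord(2, "update", 7, "paid"),
    LogRecord(3, "insert", 8, "pending"),
    LogRecord(4, "delete", 8),
]
target = {}
cp = apply_from_log(log[:2], target, 0)  # reader stops after LSN 2
cp = apply_from_log(log, target, cp)     # full replay; LSNs 1 and 2 are skipped
print(cp, target)  # 4 {7: 'paid'}
```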

Benefits of Using CDC

Implementing Change Data Capture (CDC) isn’t just a technical upgrade; it’s a strategic advantage. By rethinking how data moves through your ecosystem, CDC delivers speed, precision, and performance at scale. Here’s how:

Timeliness: Act on Data the Moment It Changes

CDC enables near real-time data replication, ensuring decisions are made with the freshest insights possible. That’s a game-changer for businesses where timing is critical.

- Financial services can detect fraud as transactions occur.

- Retailers can sync inventory levels across systems instantly.

- Logistics teams can adjust routes dynamically based on live data.

Instead of reacting hours later, you act in the moment.

Efficiency: Minimize Load on Critical Systems

Traditional batch ETL processes pull entire datasets repeatedly, straining your databases and wasting compute. CDC optimizes this by capturing only the changes.

- With log-based capture, reads from transaction logs instead of querying entire tables.

- Low impact on production workloads.

- Frees up system resources for operational performance.

That means faster pipelines, without sacrificing your application performance.

Reliability: Accurate, Consistent, and Trustworthy

Data integrity is non-negotiable. A well-implemented CDC pipeline, particularly one using log-based capture, records every committed change and applies it in the correct order, preserving consistency across systems.

- Maintains transactional order and integrity.

- Reduces risk of data loss or duplication.

- Supports error handling and exactly-once delivery in advanced CDC platforms.

This reliability makes it ideal for financial reporting, compliance, and regulatory use cases.

Scalability: Ready for Modern Data Architectures

Modern data platforms demand more than just speed; they need resilience. CDC fits seamlessly into event-driven and microservices-based ecosystems.

- Supports high-throughput, distributed processing.

- Scales horizontally with your data volume and business growth.

- Ideal for cloud-native and hybrid data architectures.

You get robust pipelines that evolve with your infrastructure, without becoming brittle.

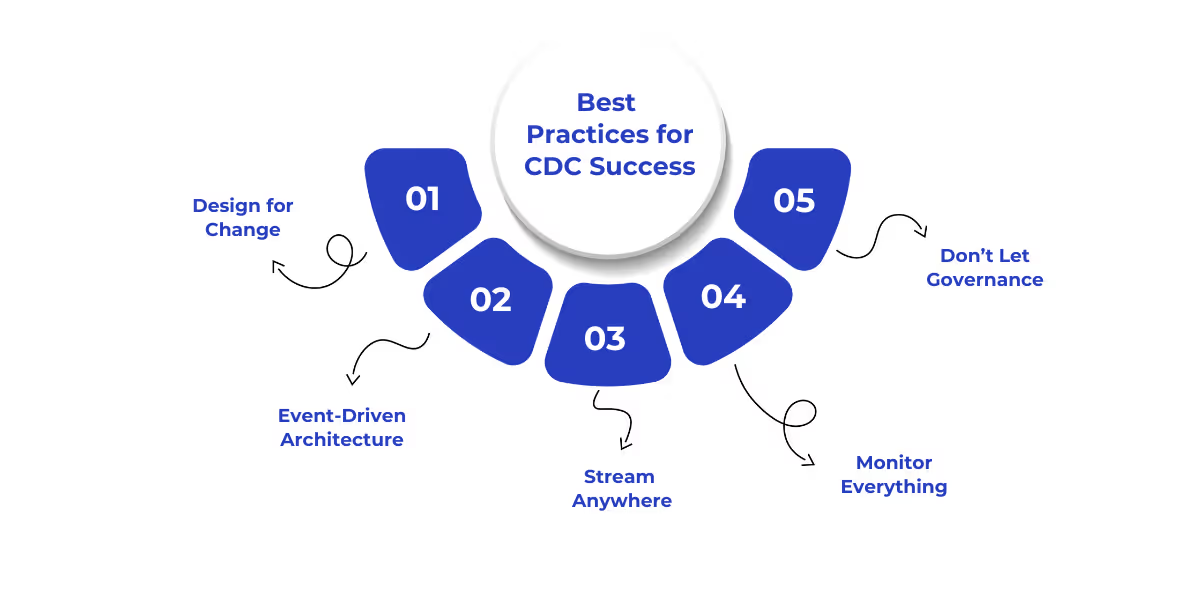

Best Practices for CDC Success

Turning on Change Data Capture is easy. Making it reliable at scale? That takes strategy. Microsoft Fabric CDC enables powerful real-time data movement, but brittle pipelines, silent failures, and schema drift can quickly derail the benefits if not handled proactively.

Here’s how to build CDC pipelines that last:

1. Design for Change, Not Stability

Source systems change frequently. New columns show up, data types evolve, and tables disappear. Hardcoded pipelines break when assumptions shift. Instead, assume drift is inevitable. Use tools or registries that detect schema changes automatically and adapt downstream targets accordingly. Microsoft Fabric’s integration with tools like Azure Data Factory or third-party connectors can help propagate changes with minimal manual effort.

CDC success means designing with flexibility, not fragility.
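Detecting drift comes down to comparing the source’s current columns with the target’s. The sketch below is hypothetical: the column dictionaries would in practice come from the source catalog (for example `INFORMATION_SCHEMA.COLUMNS`) or a schema registry, and the policy shown (add new columns automatically, flag type changes and dropped columns for review) is an assumption, not a Fabric feature.

```python
def plan_schema_changes(source_cols: dict, target_cols: dict, table: str) -> list:
    """Return DDL for additive changes plus review notes for risky ones."""
    statements = []
    for name, dtype in source_cols.items():
        if name not in target_cols:
            # New source column: safe to add to the target automatically.
            statements.append(f"ALTER TABLE {table} ADD COLUMN {name} {dtype}")
        elif target_cols[name] != dtype:
            # Type change: flagged for a human rather than applied blindly.
            statements.append(
                f"-- REVIEW: {table}.{name} changed {target_cols[name]} -> {dtype}"
            )
    for name in sorted(target_cols.keys() - source_cols.keys()):
        # Column vanished upstream: keep it in the target, but flag it.
        statements.append(f"-- REVIEW: {table}.{name} no longer exists in source")
    return statements

source = {"id": "INTEGER", "email": "TEXT", "loyalty_tier": "TEXT", "score": "REAL"}
target = {"id": "INTEGER", "email": "TEXT", "score": "INTEGER", "fax": "TEXT"}
for stmt in plan_schema_changes(source, target, "customers"):
    print(stmt)
# ALTER TABLE customers ADD COLUMN loyalty_tier TEXT
# -- REVIEW: customers.score changed INTEGER -> REAL
# -- REVIEW: customers.fax no longer exists in source
```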

2. Build for Scale with Event-Driven Architecture

Real-time performance doesn’t mean monolithic design. Loosely coupled, microservices-style pipelines give you the agility to scale each component independently. Insert a buffer like Azure Event Hubs or Kafka between source and destination to decouple workloads and prevent downstream bottlenecks.

The goal? High-throughput, fault-tolerant streams that don’t overload your operational systems.
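The decoupling idea can be shown in miniature with a bounded in-process queue between a capture step and a load step. This is only an analogy, under the assumption that Python’s `queue.Queue` stands in for Azure Event Hubs or Kafka; unlike those services it is neither durable nor distributed. The `capture` and `load` functions are hypothetical. The bounded buffer lets the producer and consumer run at different speeds, and a full buffer applies backpressure instead of overwhelming the destination.

```python
import queue
import threading

buffer = queue.Queue(maxsize=100)  # bounded: a slow consumer applies backpressure
SENTINEL = None                    # marks the end of the stream

def capture(changes) -> None:
    """Producer: push change events into the buffer (stands in for the CDC reader)."""
    for event in changes:
        buffer.put(event)  # blocks while the buffer is full
    buffer.put(SENTINEL)

def load(sink: list) -> None:
    """Consumer: drain the buffer at its own pace (stands in for the loader)."""
    while True:
        event = buffer.get()
        if event is SENTINEL:
            break
        sink.append(event)

sink = []
events = [{"op": "insert", "id": i} for i in range(250)]
consumer = threading.Thread(target=load, args=(sink,))
consumer.start()
capture(events)
consumer.join()
print(len(sink))  # 250
```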

3. Read Once, Stream Anywhere

One change stream. Multiple consumers. That’s the pattern to aim for.

Instead of spinning up separate CDC jobs for every consumer (BI teams, ML pipelines, dashboards), capture the data changes once and fan them out to every target. This pattern not only minimizes source load but also centralizes processing and reduces redundant work. Think of it as a publish-subscribe model for your entire data estate.
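A minimal in-process version of this publish-subscribe pattern looks like the following. `ChangeStream` and the three consumer handlers are hypothetical; in a real deployment the stream would be a topic in Kafka, Event Hubs, or a Fabric eventstream, with each consumer reading independently. The point is that each change is read from the source once and then delivered to every subscriber.

```python
from typing import Callable, List

class ChangeStream:
    """Capture once, deliver to every subscriber (in-process publish-subscribe)."""

    def __init__(self) -> None:
        self._subscribers: List[Callable[[dict], None]] = []

    def subscribe(self, handler: Callable[[dict], None]) -> None:
        self._subscribers.append(handler)

    def publish(self, event: dict) -> None:
        for handler in self._subscribers:
            handler(event)

bi_rows = []                 # hypothetical BI staging table
ml_features = {}             # hypothetical feature store: customer -> latest amount
dashboard = {"orders": 0}    # hypothetical live counter

def update_features(event: dict) -> None:
    ml_features[event["customer"]] = event["amount"]

def count_orders(event: dict) -> None:
    dashboard["orders"] += 1

stream = ChangeStream()
stream.subscribe(bi_rows.append)
stream.subscribe(update_features)
stream.subscribe(count_orders)

for event in [{"customer": "c1", "amount": 40}, {"customer": "c2", "amount": 15}]:
    stream.publish(event)  # read once from the source, delivered to three consumers

print(len(bi_rows), ml_features, dashboard)  # 2 {'c1': 40, 'c2': 15} {'orders': 2}
```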

4. Monitor Everything, Validate Often

What good is a pipeline if you can’t see inside it? Continuous observability is a must. Use tools that give visibility into throughput, latency, and system health, ideally with built-in anomaly detection and validation checks. Microsoft Fabric integrates with monitoring tools like Azure Monitor and Log Analytics to help surface issues before they cascade downstream.

Trustworthy data starts with transparent systems.
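Two of the most useful checks are replication lag (how long a change takes to reach the target) and row-count reconciliation between source and target. The sketch below shows the logic only; the `replication_lag` and `check_pipeline` helpers and the 60-second threshold are hypothetical, and in practice the inputs would come from your CDC tool’s metrics and the monitoring tools mentioned above.

```python
from datetime import datetime, timedelta

def replication_lag(source_commit: datetime, target_apply: datetime) -> timedelta:
    """Time between a change committing at the source and landing in the target."""
    return target_apply - source_commit

def check_pipeline(source_count: int, target_count: int, lags,
                   max_lag: timedelta = timedelta(seconds=60)) -> list:
    """Return human-readable alerts; an empty list means the checks passed."""
    alerts = []
    if source_count != target_count:
        alerts.append(f"row count mismatch: source={source_count} target={target_count}")
    worst = max(lags, default=timedelta(0))
    if worst > max_lag:
        alerts.append(
            f"lag {worst.total_seconds():.0f}s exceeds {max_lag.total_seconds():.0f}s"
        )
    return alerts

t0 = datetime(2025, 8, 5, 12, 0, 0)
lags = [replication_lag(t0, t0 + timedelta(seconds=s)) for s in (2, 5, 95)]
print(check_pipeline(10_000, 9_998, lags))
# ['row count mismatch: source=10000 target=9998', 'lag 95s exceeds 60s']
```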

5. Don’t Let Governance Be an Afterthought

Security, auditability, and data quality aren’t optional; they’re foundational. Apply fine-grained access controls to change streams. Encrypt data at rest and in transit. Log every data event for traceability. Embed data quality checks directly in your pipelines to catch issues in-flight, not after the fact.

Your CDC architecture should align with your broader data governance strategy. Microsoft Fabric’s native support for Microsoft Purview (formerly Azure Purview) and access controls makes this achievable from day one.
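In-flight quality checks can be as simple as validating each change event before it is loaded and routing failures to a quarantine (dead-letter) area along with the reasons. The rules, field names, and the `validate` and `route` helpers below are hypothetical examples; real rules would come from your data contracts.

```python
def validate(event: dict) -> list:
    """Return a list of rule violations for one change event (hypothetical rules)."""
    errors = []
    if event.get("op") not in {"insert", "update", "delete"}:
        errors.append("unknown op")
    if event.get("id") is None:
        errors.append("missing primary key")
    if event.get("op") != "delete" and "@" not in str(event.get("email", "")):
        errors.append("invalid email")
    return errors

def route(events):
    """Split a batch into clean events and quarantined events with their reasons."""
    clean, quarantined = [], []
    for event in events:
        errors = validate(event)
        if errors:
            quarantined.append({"event": event, "errors": errors})
        else:
            clean.append(event)
    return clean, quarantined

batch = [
    {"op": "insert", "id": 1, "email": "a@example.com"},
    {"op": "update", "id": None, "email": "b@example.com"},
    {"op": "delete", "id": 3},
    {"op": "insert", "id": 4, "email": "not-an-email"},
]
clean, quarantined = route(batch)
print([e["id"] for e in clean])            # [1, 3]
print([q["errors"] for q in quarantined])  # [['missing primary key'], ['invalid email']]
```

Keeping the rejected events and their reasons, rather than dropping them, also gives you the traceability described above.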

Key Use Cases for Change Data Capture

Change Data Capture (CDC) unlocks real-time data movement across critical business systems. Here are some high-impact use cases:

- Real-Time Analytics: CDC feeds live data into BI tools and dashboards, allowing teams to track KPIs, spot trends, and respond faster, without waiting on batch jobs.

- Cloud Migration & Hybrid Sync: CDC enables near-zero-downtime migrations by syncing on-prem and cloud databases in real time. It supports hybrid architectures with continuous data replication.

- Lakehouse Hydration: Modern data lakehouses thrive on current data. CDC streams fresh transactions directly into platforms like Microsoft Fabric, keeping analytics both timely and efficient.

- Operational Intelligence & AI: Low-latency CDC data can trigger intelligent workflows, like fraud detection or personalized recommendations, by powering real-time AI/ML models.

Choosing the Right CDC Tool

Navigating the CDC vendor landscape can be daunting: solutions range from lightweight frameworks to full-fledged cloud platforms. For enterprise-grade workloads, it's essential to assess these options based on latency, reliability, scalability, and ease of management.

At WaferWire, we help clients align their data architecture with operational goals. Rather than pushing a one-size-fits-all solution, we evaluate each use case against key criteria:

- Log-based vs. batch-based capture – Ensuring true real-time replication without overloading source systems.

- Production-grade resilience – Alerting, monitoring, and rollback features built-in.

- Scalability & extensibility – Tools should handle growth in data volume without architectural overhaul.

- DevOps-ready interfaces – Simple deployment, version control, and observability.

Here’s how CDC solutions typically line up:

Rather than promoting a single option, WaferWire collaborates with your team to:

- Profile workloads – Define SLA and throughput requirements.

- Match tool to need – Choose between lightweight frameworks for agile use cases and robust platforms for real-time operations.

- Validate performance – Run tests in proof-of-concept environments.

- Integrate with Fabric – Configure CDC streams into Microsoft Fabric’s OneLake or Data Factory pipelines, ensuring smooth downstream adoption.

For example, we worked with a large retailer to implement log-based CDC into Microsoft Fabric, using CDC in Copy job to feed operational changes into streaming dashboards. That solution delivered sub-minute refresh rates with minimal overhead.

Conclusion

Change Data Capture in Microsoft Fabric unlocks real-time analytics and smarter operations, but only when implemented with the right strategy and tools. Success depends on more than just enabling CDC; it requires thoughtful integration, monitoring, and performance alignment.

WaferWire helps you get it right.

Our experts design tailored CDC solutions within Microsoft Fabric to ensure low-latency data sync, high reliability, and future-ready scalability.

Start your CDC journey with confidence.

Talk to WaferWire today to power your next-gen data platform.

FAQs

1. What is Change Data Capture (CDC) in Microsoft Fabric?

CDC in Microsoft Fabric enables tracking and syncing of changes (INSERTs, UPDATEs, DELETEs) from source databases to downstream data systems in near real-time.

2. Is CDC built-in to Microsoft Fabric?

Yes. Fabric supports CDC natively, for example through CDC in Copy jobs in Data Factory, allowing efficient incremental ingestion from supported sources.

3. What’s the difference between log-based and trigger-based CDC?

Log-based CDC reads changes directly from database logs (low overhead), while trigger-based CDC uses database triggers (simpler to set up, but adds overhead to every write transaction).

4. Can I use CDC for both operational and analytical use cases?

Absolutely. CDC is ideal for real-time reporting, streaming analytics, replication, and microservice integration.

5. How can WaferWire help with Microsoft Fabric CDC?

WaferWire offers end-to-end support, from choosing the right CDC method to implementing, monitoring, and scaling it within Microsoft Fabric environments.


Copyright © 2025 WaferWire Cloud Technologies
