Understanding Metadata in Microsoft Fabric Data Pipelines

Sai P

17th Sept 2025

Is your data pipeline slowing you down?

Making decisions quickly and with confidence hinges on how well you manage and utilize your data. But with growing volumes of data from multiple sources, maintaining efficiency can feel like trying to juggle too many things at once.

By leveraging metadata, you can transform how your data flows, making it not only more organized but also more powerful. Metadata is what allows you to manage, automate, and scale data processes across your organization.

In this blog, we’ll break down how metadata in Microsoft Fabric can supercharge your data pipelines, making them more agile, accurate, and efficient.

Key Takeaways

- Metadata-driven pipelines in Microsoft Fabric offer businesses a streamlined approach to managing and automating their data workflows, ensuring better performance and scalability.

- Metadata plays a vital role in data governance by ensuring consistency, security, and transparency across data pipelines.

- Data lineage tracking, facilitated by metadata, helps organizations maintain full visibility into their data’s journey, which is essential for auditing and compliance.

- With metadata-based automation, businesses can significantly reduce manual intervention, accelerate processes, and improve data quality.

- Using metadata in data pipelines enhances collaboration and efficiency, allowing teams to work with real-time, consistent data, optimizing decision-making.

What is Metadata in Microsoft Fabric?

Metadata, in simple terms, is data about data. In Microsoft Fabric, it is the foundational information that defines the structure, usage, and behavior of data within a data pipeline.

It governs how data is processed, ensures it’s secure and compliant, and helps automate many of the tasks that would otherwise require manual intervention. This allows businesses to manage their data pipelines more efficiently and scale their operations without losing control over their data quality.

The Added Value of Metadata in Microsoft Fabric

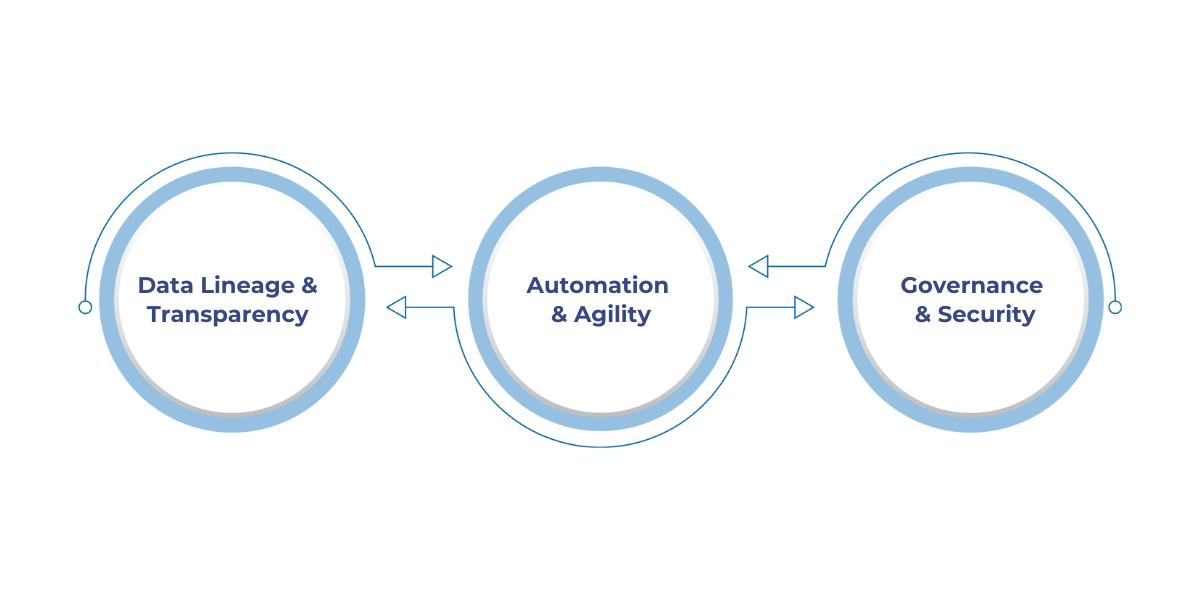

Fabric metadata ensures data integrity and governance, while enhancing transparency and decision-making:

- Data Lineage & Transparency: Operational metadata tracks data flow, aiding in compliance, auditing, and bottleneck identification.

- Automation & Agility: Automates tasks like validation, transformation, and reporting, reducing errors and boosting speed.

- Governance & Security: Metadata enables better security by tagging data for encryption and access control, ensuring compliance and privacy.

With that foundation in place, we can look at the role metadata plays inside data pipelines. Understanding how it functions there is key to fully appreciating its value.

The Role of Metadata in Data Pipelines

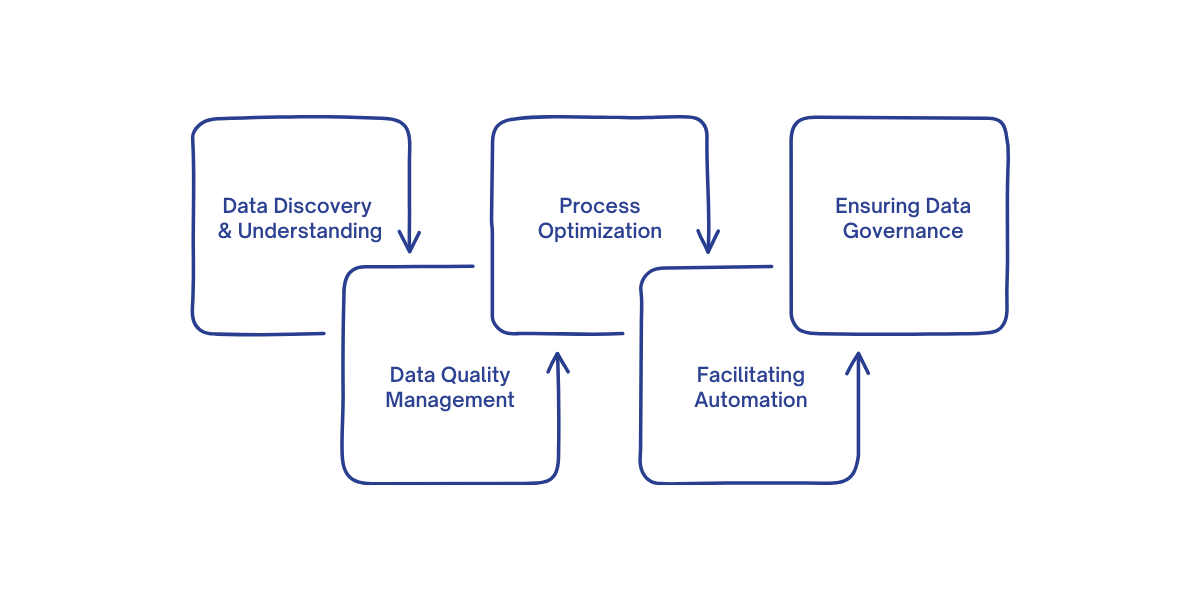

In Microsoft Fabric, metadata is crucial in managing and processing data. It enhances how data flows, ensuring efficiency, compliance, and optimization in data pipelines. Here's how:

- Data Discovery and Understanding: Metadata helps users quickly identify and understand data. It catalogs and indexes data, enabling fast access and simplifying data retrieval. Data lineage shows how data flows, supporting transparency and compliance.

- Data Quality Management: Metadata automates data validation, ensuring it meets predefined quality standards before processing. It also tracks errors back to their source, enabling quick resolution and minimizing downtime.

- Process Optimization: Metadata informs data transformation processes and helps monitor pipeline performance. It ensures efficient data flow and scalable operations by optimizing the transformation logic and identifying bottlenecks.

- Facilitating Automation and Scalability: Metadata allows dynamic adjustments to new data sources, reducing manual work. It also future-proofs data pipelines, making it easier to integrate new technologies like AI or machine learning without major redesigns.

- Ensuring Data Governance and Compliance: Metadata aids in data classification and access control, ensuring compliance with standards like GDPR or HIPAA. It also supports audit trails, providing traceability and ensuring regulatory adherence.

With the proper governance mechanisms in place, metadata ensures that data is not only accessible but also secure, compliant, and auditable.
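To make the Data Quality Management point above more concrete, here is a minimal Python sketch of metadata-driven validation. It is not Fabric code: the `COLUMN_RULES` format, the column names, and the `validate_row` helper are all assumptions chosen for illustration. The idea is only that the rules live in metadata, so adding a column means adding an entry rather than writing new validation logic.

```python
from typing import Any

# Hypothetical column-level metadata: expected Python type and nullability.
COLUMN_RULES: dict[str, dict[str, Any]] = {
    "CustomerID": {"type": int, "nullable": False},
    "OrderAmount": {"type": float, "nullable": False},
    "Notes": {"type": str, "nullable": True},
}


def validate_row(
    row: dict[str, Any],
    rules: dict[str, dict[str, Any]] = COLUMN_RULES,
) -> list[str]:
    """Return a list of rule violations for one row (empty if the row is valid)."""
    errors: list[str] = []
    for column, rule in rules.items():
        value = row.get(column)
        if value is None:
            # Missing values are only a problem for non-nullable columns.
            if not rule["nullable"]:
                errors.append(f"{column}: missing required value")
            continue
        if not isinstance(value, rule["type"]):
            expected = rule["type"].__name__
            actual = type(value).__name__
            errors.append(f"{column}: expected {expected}, got {actual}")
    return errors
```

Because each message names the offending column, a failed check can be traced back to its source field, which is the behavior the bullet describes.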

Suggested read: Fundamentals and Best Practices of Metadata Management

Now that we understand how metadata supports data flow and ensures quality, let's dive deeper into the key components of metadata-driven pipelines. This will help clarify how these elements work together to optimize data operations.

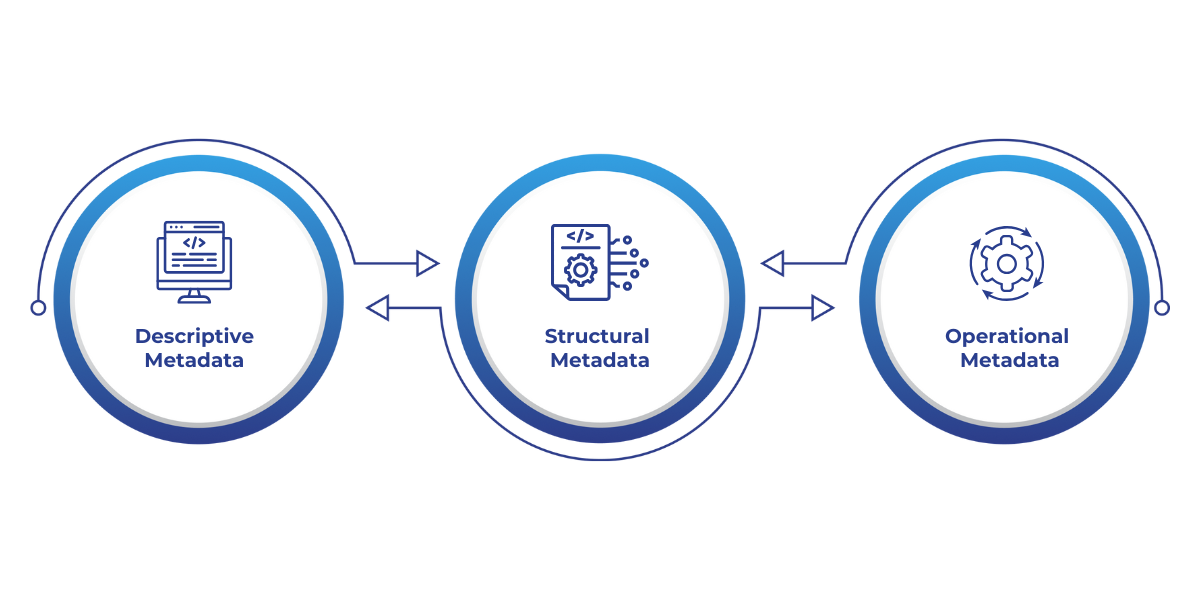

Types of Metadata in Microsoft Fabric

There are three main categories of metadata in Microsoft Fabric: Descriptive, Structural, and Operational metadata. Each type has its own unique function, contributing to the seamless management and execution of data pipelines.

1. Descriptive Metadata

Descriptive metadata is the most straightforward type. It provides basic information about the data, making it easier to understand and use. This metadata describes what the data is, helping users quickly identify, classify, and organize it.

- Column Names: Metadata that names each column in a dataset, so users can tell at a glance what each field in a table holds.

- Data Types: Information about the type of data stored (e.g., integers, floats, strings), which is crucial for data transformation and compatibility.

- Data Definitions: Includes descriptions or labels of what each data element represents. For example, “CustomerID” could be a unique identifier for a customer, while "OrderAmount" might be the total value of a customer’s purchase.

Descriptive metadata is crucial for data discovery and accessibility. It helps analysts, data engineers, and other stakeholders quickly locate and understand the data they’re working with.
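As an illustration of how descriptive metadata supports discovery, the following Python sketch models a small data dictionary and a keyword search over it. The `ColumnDescription` class, the `ORDERS_DICTIONARY` entries, and the `find_columns` helper are hypothetical; only the `CustomerID` and `OrderAmount` examples come from the text above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ColumnDescription:
    """Descriptive metadata for one column: name, type, and plain-language meaning."""
    name: str
    data_type: str
    definition: str


# Hypothetical data dictionary for an orders table.
ORDERS_DICTIONARY = [
    ColumnDescription("CustomerID", "integer", "Unique identifier for a customer"),
    ColumnDescription("OrderAmount", "float", "Total value of a customer's purchase"),
]


def find_columns(keyword: str, dictionary: list[ColumnDescription]) -> list[str]:
    """Return names of columns whose name or definition mentions the keyword."""
    needle = keyword.lower()
    return [
        col.name
        for col in dictionary
        if needle in col.name.lower() or needle in col.definition.lower()
    ]
```

Searching for "purchase" would surface `OrderAmount` even though the word never appears in the column name, which is the practical benefit of keeping definitions alongside names.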

2. Structural Metadata

Structural metadata defines the organization and relationships of data within databases or other data systems. This type of metadata outlines how data is stored, linked, and formatted across different platforms, enabling better data organization and interaction.

- Table Schema: Metadata that details the structure of a table or dataset, including column names, data types, and constraints. This is critical for creating relationships between data elements and ensuring consistency across datasets.

- Data Relationships: Defines how different pieces of data are related. For instance, a foreign key in a table that links to another table is part of the structural metadata.

- Indexing and Partitioning: Metadata that specifies how data is indexed or partitioned within a database. This is essential for ensuring that data retrieval is fast and efficient.

Structural metadata is key to data modeling, ensuring that data flows correctly through different processes while maintaining integrity and accessibility.
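One practical use of structural metadata is deciding the order in which related tables should be loaded. The sketch below assumes a hypothetical `SCHEMAS` dictionary that records columns and foreign keys, and uses Python's standard `graphlib` module to sort tables so that referenced tables come first. The table and column names are illustrative only.

```python
from graphlib import TopologicalSorter

# Hypothetical structural metadata: columns plus foreign keys per table.
# Each foreign key maps a local column to the table it references.
SCHEMAS = {
    "Orders": {
        "columns": {"OrderID": "int", "CustomerID": "int", "OrderAmount": "float"},
        "foreign_keys": {"CustomerID": "Customers"},
    },
    "Customers": {
        "columns": {"CustomerID": "int", "Name": "string"},
        "foreign_keys": {},
    },
}


def load_order(schemas: dict[str, dict]) -> list[str]:
    """Return table names ordered so that referenced tables are loaded first."""
    # TopologicalSorter expects a mapping of node -> set of predecessors.
    graph = {
        table: set(meta["foreign_keys"].values())
        for table, meta in schemas.items()
    }
    return list(TopologicalSorter(graph).static_order())
```

Here `Customers` is placed before `Orders` because `Orders.CustomerID` references it; a circular reference would raise `graphlib.CycleError` rather than produce a misleading order.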

3. Operational Metadata

Operational metadata provides information about the data's lifecycle and the operations performed on it. This type of metadata is critical for understanding the history, usage, and transformations that data has gone through within the pipeline.

- Data Lineage: One of the most important aspects of operational metadata, lineage tracks the journey of data from its origin to its final destination. It shows how data has been transformed, where it came from, and how it is used in the pipeline.

- Processing Details: Metadata that records when data was processed, who processed it, and what changes were made. This is essential for auditing and tracking changes.

- Data Update History: Information about when and how often data is updated, including details about modifications or transformations. This helps businesses understand how frequently their data changes and whether any adjustments are needed to maintain its accuracy.

Operational metadata is essential for data governance, ensuring that data transformations and movements are well-documented, traceable, and compliant with internal or external regulations.
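To show what lineage tracking can look like in its simplest form, the sketch below records one entry per processing run and walks those records backwards to find everything that feeds a given target. The `RunRecord` fields and the `upstream_sources` helper are assumptions for illustration and do not reflect how Fabric stores lineage internally.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class RunRecord:
    """Operational metadata for one processing step."""
    source: str
    destination: str
    processed_by: str
    rows_copied: int
    processed_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


def upstream_sources(target: str, records: list[RunRecord]) -> set[str]:
    """Walk run records backwards to find every source that feeds `target`."""
    found: set[str] = set()
    frontier = [target]
    while frontier:
        current = frontier.pop()
        for rec in records:
            # Skip sources already seen so cycles cannot loop forever.
            if rec.destination == current and rec.source not in found:
                found.add(rec.source)
                frontier.append(rec.source)
    return found
```

With records for a source-to-bronze copy and a bronze-to-silver transformation, asking for the upstream sources of the silver table returns both the bronze table and the original source, which is the "journey from origin to destination" that lineage is meant to capture.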

Also read: Top Metadata Management Tools and Their Features

With the types of metadata covered, we can turn to how to implement metadata-driven pipelines in practice.

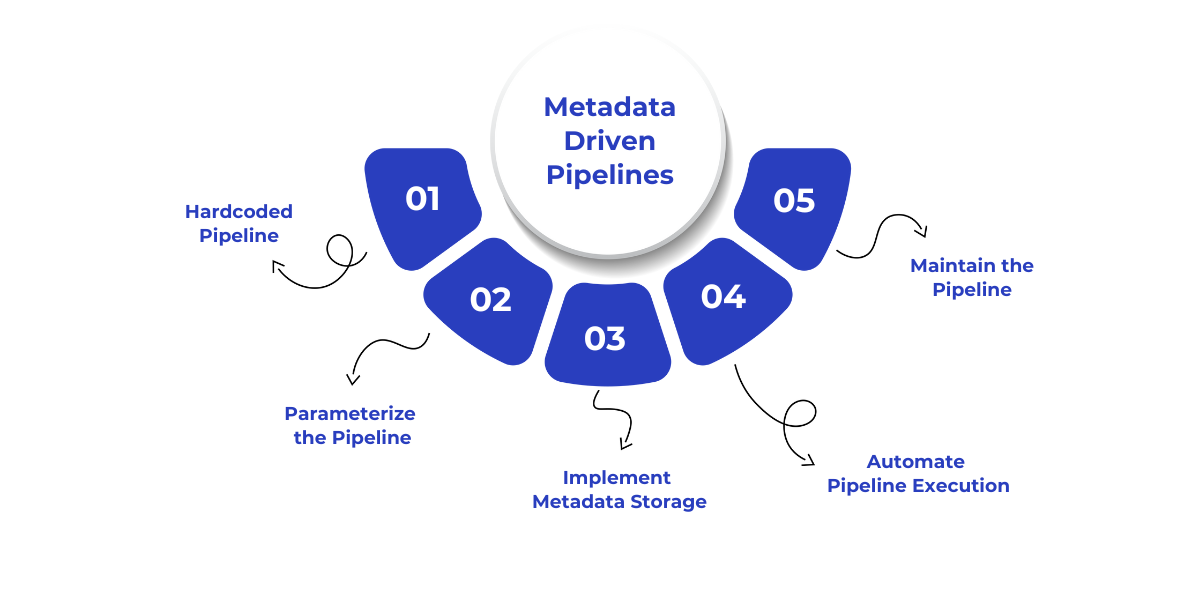

Steps to Implement Metadata-Driven Pipelines in Microsoft Fabric

Building metadata-driven pipelines in Microsoft Fabric allows organizations to automate and scale their data integration processes efficiently. Here's a practical guide to implementing these pipelines:

1. Start with a Simple, Hardcoded Pipeline

Begin by creating a straightforward pipeline that copies a single table from a source to a destination, such as a data lakehouse. This initial step helps validate connections and configurations before introducing complexity.

- Create a Pipeline: In Microsoft Fabric, navigate to the Data Factory experience and create a new pipeline. Select the "Copy data" activity to initiate the process.

- Configure Source and Destination: Set up the source connection (e.g., Azure SQL Database) and choose the specific table to copy. For the destination, select your data lakehouse and specify the target location.

- Test the Pipeline: Run the pipeline to confirm that data is transferred correctly and that connections are functioning as expected.

Starting with a simple, hardcoded pipeline allows you to troubleshoot and confirm the basic setup before scaling.

2. Parameterize the Pipeline

Once the basic pipeline is working, introduce parameters to make it dynamic and reusable for multiple tables.

- Define Parameters: Create parameters for elements like server name, database name, schema, table name, and destination path.

- Modify Pipeline Activities: Replace hardcoded values in the source and destination configurations with the defined parameters.

- Test Parameterization: Execute the pipeline with different parameter values to ensure it can handle various tables and destinations.

Parameterizing the pipeline enables you to reuse the same pipeline logic for different datasets, reducing redundancy.
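The effect of parameterization can be sketched outside Fabric in a few lines of Python. The `CopyParams` class mirrors the parameters listed above, and `build_source_query` is a hypothetical helper showing how one piece of logic serves any table once the hardcoded names are gone. Inside a Fabric pipeline the equivalent substitution is typically done with pipeline expressions rather than Python.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CopyParams:
    """The values that were hardcoded in the first version of the pipeline."""
    server: str
    database: str
    schema: str
    table: str
    destination_path: str


def _quote(identifier: str) -> str:
    """Bracket-quote a T-SQL identifier, escaping any closing bracket."""
    return "[" + identifier.replace("]", "]]") + "]"


def build_source_query(params: CopyParams) -> str:
    """Build the source query from parameters instead of literal names."""
    return f"SELECT * FROM {_quote(params.schema)}.{_quote(params.table)}"
```

Calling `build_source_query` with different `CopyParams` values is the Python analogue of running the same pipeline with different parameter values in the test step above.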

3. Implement Metadata Storage

To manage multiple tables efficiently, store metadata in a structured format, such as a JSON file or a database table.

- Design Metadata Structure: Define a schema that includes information like source and destination details, transformation rules, and scheduling information.

- Populate Metadata: Fill the metadata store with entries for each table you wish to process, including all necessary details.

- Access Metadata in Pipeline: Modify the pipeline to read from the metadata store and iterate over the entries, dynamically processing each table based on its metadata.

Using a metadata store centralizes configuration and makes it easier to manage and update pipeline logic.
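As a rough illustration of a JSON-based metadata store, the sketch below defines a few entries and a loader that checks each entry before use. The key names (`source_schema`, `source_table`, `destination`, `enabled`) and the sample tables are assumptions; a real store would likely also carry the transformation rules and scheduling details mentioned above.

```python
import json

# Hypothetical metadata store: one entry per table to process.
METADATA_JSON = """
[
  {"source_schema": "dbo", "source_table": "Customers",
   "destination": "Tables/customers", "enabled": true},
  {"source_schema": "dbo", "source_table": "Orders",
   "destination": "Tables/orders", "enabled": true},
  {"source_schema": "dbo", "source_table": "LegacyAudit",
   "destination": "Tables/legacy_audit", "enabled": false}
]
"""

REQUIRED_KEYS = {"source_schema", "source_table", "destination", "enabled"}


def load_entries(raw: str) -> list[dict]:
    """Parse the metadata, fail fast on malformed entries, keep enabled ones."""
    entries = json.loads(raw)
    for index, entry in enumerate(entries):
        missing = REQUIRED_KEYS - entry.keys()
        if missing:
            raise ValueError(f"entry {index} is missing keys: {sorted(missing)}")
    return [entry for entry in entries if entry["enabled"]]
```

An `enabled` flag is one simple way to take a table out of rotation by editing metadata alone, without touching the pipeline definition.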

4. Automate Pipeline Execution

Enhance the pipeline's automation by incorporating activities that handle multiple tables sequentially.

- Use "For Each" Activity: Implement the "For Each" activity to loop through each metadata entry and execute the pipeline logic for each table.

- Configure Activities: Use a "Lookup" activity to retrieve the metadata entries, then place a "Copy Data" activity inside the loop to transfer each table according to its entry.

- Set Up Monitoring: Implement logging and error handling to monitor the execution of each iteration and handle any issues that arise.

Automation ensures that the pipeline can process numerous tables without manual intervention, improving efficiency.
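The looping behavior described in this step can be approximated in Python as follows. This is a sketch of the pattern, not of the Fabric "For Each" activity itself: `run_for_each` and the `copy_table` callback are hypothetical names, and the callback stands in for whatever actually moves the data.

```python
import logging
from typing import Callable

logger = logging.getLogger("metadata_pipeline")


def run_for_each(
    entries: list[dict],
    copy_table: Callable[[dict], None],
) -> dict[str, str]:
    """Process every metadata entry in order and record a status per table."""
    results: dict[str, str] = {}
    for entry in entries:
        name = entry["source_table"]
        try:
            copy_table(entry)
            results[name] = "succeeded"
            logger.info("Copied %s", name)
        except Exception as exc:
            # One failing table should not stop the remaining iterations.
            results[name] = f"failed: {exc}"
            logger.error("Copy failed for %s: %s", name, exc)
    return results
```

Catching the exception per iteration is a design choice: it lets the run finish and report which tables failed, at the cost of needing a follow-up check on the returned statuses.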

5. Optimize and Maintain the Pipeline

After setting up the pipeline, focus on optimization and maintenance to ensure long-term success.

- Monitor Performance: Use Microsoft Fabric's monitoring tools to track pipeline performance and identify bottlenecks.

- Implement Error Handling: Set up retries and error notifications to handle failures gracefully.

- Update Metadata: As new tables are added or existing ones change, update the metadata store to reflect these modifications.

- Review and Refine: Periodically review the pipeline logic and metadata structure to incorporate improvements and adapt to evolving requirements.

Regular optimization and maintenance keep the pipeline running smoothly and adaptable to changes.
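For the error-handling point above, here is a minimal sketch of retrying with exponential backoff. The function name, the default of three attempts, and the one-second base delay are illustrative assumptions, not recommended Fabric settings. The `sleep` parameter is injectable so the behavior can be checked without actually waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(
    action: Callable[[], T],
    max_attempts: int = 3,
    base_delay_seconds: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `action`, retrying on failure with exponentially growing delays."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return action()
        except Exception:
            if attempt == max_attempts:
                # Out of attempts: surface the last error to the caller.
                raise
            # Wait 1x, 2x, 4x, ... the base delay before the next attempt.
            sleep(base_delay_seconds * 2 ** (attempt - 1))
    raise AssertionError("unreachable")
```

Re-raising after the final attempt keeps failures visible, so a notification step can still act on them instead of the error being silently swallowed.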

Conclusion

Implementing metadata-driven pipelines in Microsoft Fabric is a strategic approach to modernizing your data infrastructure. By leveraging metadata, organizations can automate data workflows, enhance scalability, and ensure consistent data governance.

At WaferWire, we specialize in helping businesses build, optimize, and scale their data pipelines. Whether you're just starting with Microsoft Fabric or need expert guidance in implementing complex metadata-driven workflows, our team of experts is here to support you every step of the way. We offer comprehensive services, including:

- Data Estate Modernization: Transforming legacy systems to modern, cloud-based architectures.

- Metadata Management: Implementing tools and strategies to effectively manage and utilize metadata.

- Data Integration: Seamlessly integrating data from various sources into a unified platform.

- Governance and Compliance: Ensuring data processes adhere to industry standards and regulations.

If you're ready to unlock the full potential of your data with metadata-driven pipelines, contact WaferWire today. Let us help you navigate the complexities of Microsoft Fabric and build a data architecture that drives informed decision-making and business growth.

FAQs

1. How does metadata-driven automation reduce operational costs in data pipelines?

By automating routine tasks like data validation and transformation, metadata-driven pipelines reduce the need for manual intervention, which lowers labor costs and improves overall operational efficiency.

2. What are the common challenges businesses face when integrating metadata into their data pipelines?

Businesses often face challenges like data inconsistency across platforms, the complexity of mapping legacy systems to modern metadata formats, and the need for specialized tools to manage and automate metadata workflows.

3. How can metadata be used to improve data accessibility in distributed systems?

Metadata helps standardize data formats and structures, making it easier to access and query data across distributed systems. It also enables the integration of data from different sources, providing a unified view of information.

4. What are the key benefits of using metadata to track and manage data transformations in a pipeline?

Tracking transformations via metadata provides greater transparency into data processing, enabling businesses to monitor the success of data operations, identify bottlenecks, and ensure that data is transformed consistently across pipelines.

5. Can metadata-driven pipelines be adapted to handle unstructured data?

Yes, metadata can be used to categorize and define unstructured data, such as text, images, or videos, allowing businesses to process it alongside structured data and apply the same automation and governance principles.

Copyright © 2025 WaferWire Cloud Technologies
