Microsoft Fabric Basics: Iceberg Integration Guide

Mownika

3rd Oct 2025

Enterprises today face a complex challenge: leveraging massive volumes of data while keeping costs under control, ensuring compliance, and maintaining agility. Traditional data warehouses and fragmented cloud solutions often struggle to keep pace with these demands.

Microsoft Fabric, Microsoft’s unified analytics SaaS platform, addresses these challenges by combining data engineering, governance, AI, and business intelligence in a single solution. When paired with Apache Iceberg, an open table format designed for large and evolving datasets, Fabric can anchor a lakehouse architecture that balances performance, compliance, and scalability.

Brief breakdown:

- Microsoft Fabric provides a unified, governed analytics platform.

- Apache Iceberg adds scalability, openness, and transaction reliability.

- Together, they create a robust lakehouse architecture that reduces costs, accelerates insights, and future-proofs data strategies.

- WaferWire enables organizations to adopt Fabric + Iceberg effectively, ensuring alignment with business and compliance priorities.

Microsoft Fabric in Context

Modern enterprises manage fragmented data landscapes, including structured records in relational databases, unstructured files in data lakes, real-time streams from IoT devices, and operational data scattered across CRM and ERP systems. This fragmentation creates bottlenecks for analytics, slows down decision-making, and drives up IT costs.

Microsoft Fabric addresses this challenge by providing a unified, end-to-end analytics platform built on a Software-as-a-Service (SaaS) foundation. Unlike traditional architectures that require multiple disjointed tools for ingestion, transformation, storage, and visualization, Fabric integrates all these capabilities into a single environment.

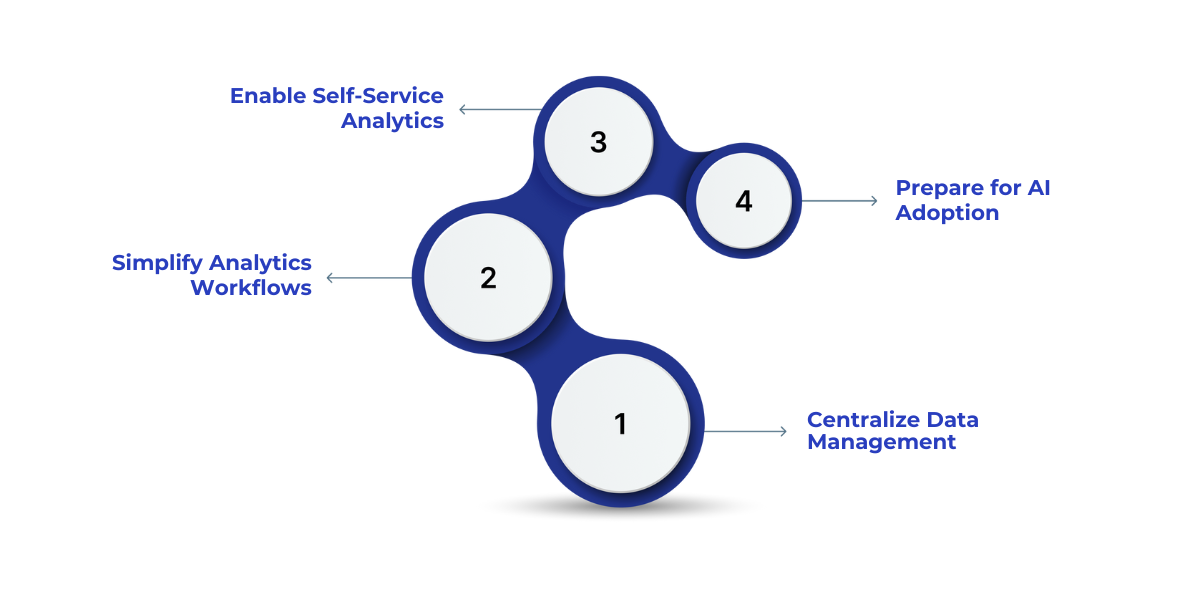

At its core, Fabric combines the strengths of Azure Synapse, Power BI, Data Factory, and AI-driven services, all accessible under one umbrella. This integration enables organizations to:

- Centralize data management – ensuring consistency across business units and compliance frameworks.

- Simplify analytics workflows – reducing reliance on patchwork solutions from ingestion through reporting.

- Enable self-service analytics – empowering business users to explore data without heavy IT intervention.

- Prepare for AI adoption – with built-in support for advanced analytics and machine learning models.

The result is an ecosystem where both technical teams and business leaders gain access to trusted, actionable insights without the complexity of stitching together multiple platforms.

Apache Iceberg: The Open Table Advantage

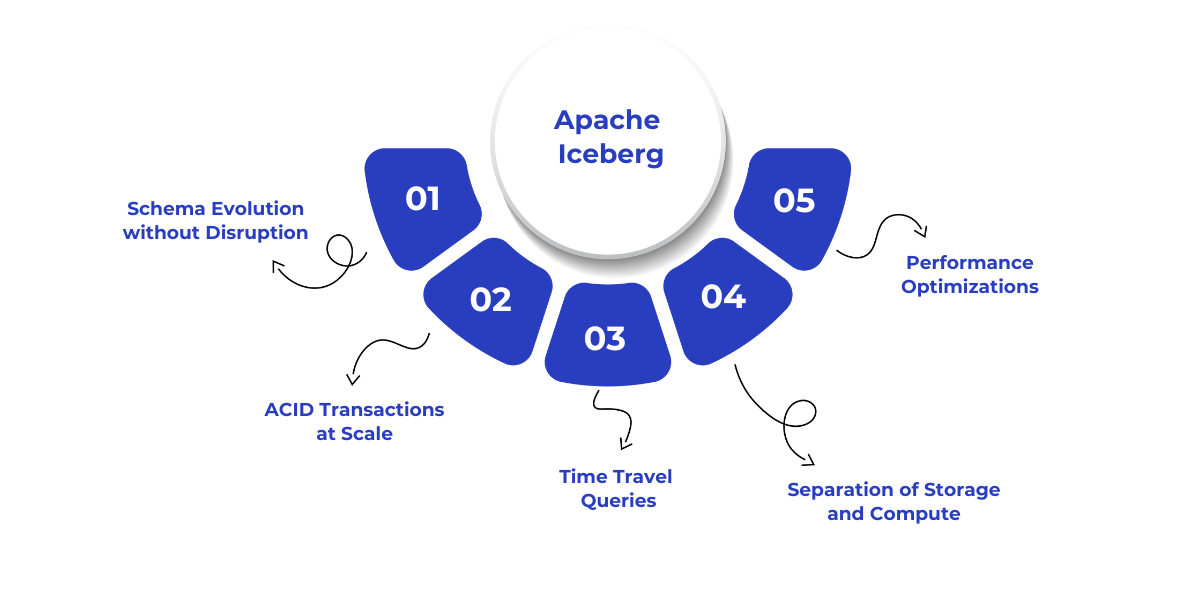

Apache Iceberg is an open table format built to handle massive analytic datasets while addressing the shortcomings of legacy approaches. It introduces a layer of abstraction that treats tables in a data lake with the same reliability, consistency, and governance features traditionally associated with enterprise data warehouses.

Key advantages include:

- Schema evolution without disruption – Iceberg supports adding, dropping, or renaming columns without breaking downstream pipelines, making it easier to adapt data structures as business requirements change.

- ACID transactions at scale – By ensuring atomicity, consistency, isolation, and durability, Iceberg provides the reliability required for concurrent data operations across large teams and systems.

- Time travel queries – Businesses can query historical snapshots of data, enabling powerful use cases like regulatory audits, root cause analysis, and performance trend evaluations.

- Separation of storage and compute – Iceberg’s design enables organizations to leverage multiple processing engines (such as Spark, Trino, and Flink) without being tied to a single vendor or compute environment.

- Performance optimizations – Hidden partitioning and file-level metadata let query engines skip data files that cannot match a filter, which reduces query latency and resource usage and lowers costs at scale.
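
To make hidden partitioning concrete, here is a toy sketch in plain Python. It is not Iceberg's implementation: it only mimics the idea of a day transform, where the partition value is derived from a timestamp column so that a query filtering on the timestamp can skip files without the user ever referencing a partition column. The file names and the `prune_files` helper are hypothetical.

```python
from datetime import datetime, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def day_transform(ts: datetime) -> int:
    """Map a timezone-aware timestamp to whole days since 1970-01-01 UTC."""
    return (ts - EPOCH).days


def prune_files(file_days: dict, start: datetime, end: datetime) -> list:
    """Return only the files whose partition day falls within [start, end]."""
    lo, hi = day_transform(start), day_transform(end)
    return sorted(name for name, day in file_days.items() if lo <= day <= hi)


def utc(*args) -> datetime:
    """Shorthand for building a UTC timestamp."""
    return datetime(*args, tzinfo=timezone.utc)


# Hypothetical data files, each tagged with the partition value of its rows.
file_days = {
    "part-000.parquet": day_transform(utc(2025, 10, 1, 8, 0)),
    "part-001.parquet": day_transform(utc(2025, 10, 2, 23, 59)),
    "part-002.parquet": day_transform(utc(2025, 10, 3, 12, 0)),
}

# The query filters on the timestamp itself; the day values stay hidden.
selected = prune_files(file_days, utc(2025, 10, 2), utc(2025, 10, 3, 6, 0))
print(selected)
```

Only `part-001.parquet` and `part-002.parquet` are selected, so the file for 1 October is never opened.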

Why Fabric + Iceberg Integration Is Transformative

Integrating Iceberg with Fabric creates a robust lakehouse architecture that brings together the governance and AI capabilities of Fabric with the openness and scalability of Iceberg.

Business Impact

- Unified Data Estate – A single, governed source of truth across operations and analytics.

- Optimized TCO – Decoupled compute and storage to minimize unnecessary cloud spend.

- Faster Insights – Support for large-scale analytics accelerates decision cycles.

- Regulatory Assurance – Audit-ready governance reduces compliance risks.

- Future-Proof Architecture – AI-ready infrastructure that adapts to emerging needs.

This integration bridges the gap between technical agility and business strategy, so that platform decisions directly support business objectives.

Technical Steps to Iceberg Integration in Fabric

Integrating Apache Iceberg with Microsoft Fabric enables enterprises to unlock the benefits of an open table format within a unified analytics platform. While the implementation varies based on specific business and infrastructure needs, the process generally follows these structured steps:

1. Set up the storage layer

Iceberg tables are typically stored in cloud object storage (e.g., Azure Data Lake Storage or Amazon S3). This layer serves as the foundation for managing data files and metadata in a scalable and cost-efficient manner.
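
As a small illustration of this step, the helper below builds the `abfss://` URI that an engine would use to address an Azure Data Lake Storage Gen2 location. The account name `contosolake`, the container `analytics`, and the warehouse path are placeholders, not values from any real environment.

```python
def adls_warehouse_uri(account: str, container: str, path: str = "") -> str:
    """Build an abfss:// URI for an ADLS Gen2 location.

    The format is abfss://<container>@<account>.dfs.core.windows.net/<path>.
    """
    base = f"abfss://{container}@{account}.dfs.core.windows.net"
    path = path.strip("/")  # tolerate leading or trailing slashes
    return f"{base}/{path}" if path else base


# Placeholder names for illustration only.
warehouse = adls_warehouse_uri("contosolake", "analytics", "/iceberg/warehouse/")
print(warehouse)
```

Keeping the warehouse location in one helper like this makes it easier to reuse the same path across engine configurations.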

2. Enable Iceberg-compatible engines

Fabric exposes engines such as Spark, Synapse, and SQL endpoints. Confirm which of them can read and write Iceberg tables in your environment, and configure them accordingly, before building pipelines on top of them.
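
For Spark, engine configuration usually means registering an Iceberg catalog through Spark properties. The sketch below assembles those properties as a dictionary. The property names follow Apache Iceberg's Spark integration; the catalog name `lake`, the warehouse URI, and the choice of a Hadoop-type catalog are assumptions for illustration. It also assumes the Iceberg Spark runtime JAR is on the classpath, which should be verified for the specific Fabric Spark runtime in use.

```python
def iceberg_spark_conf(catalog: str, warehouse: str) -> dict:
    """Return Spark properties that register a Hadoop-type Iceberg catalog."""
    return {
        # Enables Iceberg SQL extensions such as MERGE INTO and CALL procedures.
        "spark.sql.extensions":
            "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions",
        # Registers the catalog under the given name.
        f"spark.sql.catalog.{catalog}": "org.apache.iceberg.spark.SparkCatalog",
        # A file-system based catalog; other catalog types need other settings.
        f"spark.sql.catalog.{catalog}.type": "hadoop",
        f"spark.sql.catalog.{catalog}.warehouse": warehouse,
    }


# Placeholder catalog name and warehouse location.
conf = iceberg_spark_conf(
    "lake", "abfss://analytics@contosolake.dfs.core.windows.net/iceberg"
)
for key, value in conf.items():
    print(f"{key}={value}")
```

In a PySpark session, each pair could be applied with `SparkSession.builder.config(key, value)` before the session is created.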

3. Create and register Iceberg tables

Using SQL or Spark commands, organizations can define Iceberg tables directly on top of their data lake. Once registered, these tables become accessible across the Fabric environment, enabling consistent use by analysts and data scientists.
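
A table definition in Spark SQL might look like the statement generated below. The helper is a hypothetical convenience, and the table `lake.sales.orders` and its columns are invented for illustration. `USING iceberg` and the `days(...)` partition transform are standard Iceberg Spark DDL.

```python
def create_iceberg_table_sql(table: str, columns: dict, partition_by: str = "") -> str:
    """Build a Spark SQL CREATE TABLE statement for an Iceberg table.

    Names are interpolated as-is, so pass trusted identifiers only.
    """
    cols = ",\n  ".join(f"{name} {dtype}" for name, dtype in columns.items())
    sql = f"CREATE TABLE IF NOT EXISTS {table} (\n  {cols}\n) USING iceberg"
    if partition_by:
        sql += f"\nPARTITIONED BY ({partition_by})"
    return sql


# Hypothetical table and columns.
ddl = create_iceberg_table_sql(
    "lake.sales.orders",
    {"order_id": "bigint", "amount": "decimal(10,2)", "event_ts": "timestamp"},
    partition_by="days(event_ts)",
)
print(ddl)
```

The resulting string would be passed to `spark.sql(...)` in a session where the `lake` catalog has been registered.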

4. Ingest and transform data

With Data Factory or Spark pipelines in Fabric, raw data can be ingested into Iceberg tables. Transformations such as cleaning, enrichment, or partitioning are handled in a way that maintains Iceberg’s schema flexibility and ACID guarantees.
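
One common ingestion pattern is an upsert, where staged rows update existing records or are inserted as new ones in a single atomic commit. The sketch below generates a `MERGE INTO` statement of the kind Iceberg supports in Spark when its SQL extensions are enabled. The target table, the `staged_orders` source, and the column names are hypothetical.

```python
def merge_upsert_sql(target: str, source: str, key: str, columns: list) -> str:
    """Build a MERGE INTO statement that upserts `source` rows into `target`.

    Names are interpolated as-is, so pass trusted identifiers only.
    """
    updates = ", ".join(f"t.{c} = s.{c}" for c in columns if c != key)
    all_cols = ", ".join(columns)
    values = ", ".join(f"s.{c}" for c in columns)
    return (
        f"MERGE INTO {target} t\n"
        f"USING {source} s\n"
        f"ON t.{key} = s.{key}\n"
        f"WHEN MATCHED THEN UPDATE SET {updates}\n"
        f"WHEN NOT MATCHED THEN INSERT ({all_cols}) VALUES ({values})"
    )


# Hypothetical target table, staging view, and columns.
merge_sql = merge_upsert_sql(
    "lake.sales.orders", "staged_orders", "order_id",
    ["order_id", "amount", "status"],
)
print(merge_sql)
```

Because the merge is committed as one snapshot, readers see either the table before the upsert or after it, never a partial result.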

5. Leverage time travel and versioning

Once the tables are active, Fabric users can leverage Iceberg’s advanced capabilities, including time travel queries for historical insights and schema evolution to accommodate business changes without requiring re-engineering of pipelines.
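
In Spark SQL (version 3.3 or later), time travel and schema evolution are expressed with short statements such as the ones generated below. The helpers, the table name, the timestamp, and the snapshot ID are illustrative placeholders. The `TIMESTAMP AS OF`, `VERSION AS OF`, and `ALTER TABLE ... RENAME COLUMN` forms are the ones documented for Iceberg's Spark integration.

```python
def time_travel_sql(table: str, *, timestamp: str = None, snapshot_id: int = None) -> str:
    """Build a query against a historical state of an Iceberg table."""
    if (timestamp is None) == (snapshot_id is None):
        raise ValueError("pass exactly one of timestamp or snapshot_id")
    if timestamp is not None:
        return f"SELECT * FROM {table} TIMESTAMP AS OF '{timestamp}'"
    return f"SELECT * FROM {table} VERSION AS OF {snapshot_id}"


def rename_column_sql(table: str, old: str, new: str) -> str:
    """Build a metadata-only column rename; data files are not rewritten."""
    return f"ALTER TABLE {table} RENAME COLUMN {old} TO {new}"


# Placeholder table, timestamp, and snapshot ID.
print(time_travel_sql("lake.sales.orders", timestamp="2025-09-30 00:00:00"))
print(time_travel_sql("lake.sales.orders", snapshot_id=1234567890))
print(rename_column_sql("lake.sales.orders", "status", "order_status"))
```

Real snapshot IDs can be looked up in the table's `snapshots` metadata table, for example `SELECT * FROM lake.sales.orders.snapshots`.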

6. Integrate with downstream analytics

Iceberg tables integrate seamlessly with Fabric’s Power BI and Synapse components, enabling visualization, reporting, and advanced analytics while maintaining governance integrity.

7. Monitor, optimize, and govern

Finally, ongoing management is essential. Fabric provides centralized monitoring, security, and governance tools to ensure Iceberg tables remain performant, compliant, and cost-effective over time.
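
On the Iceberg side, routine maintenance typically includes expiring old snapshots and compacting small data files. The sketch below generates calls to Iceberg's `expire_snapshots` and `rewrite_data_files` Spark procedures. The catalog name, table name, cutoff timestamp, and retention count are placeholders, and suitable values depend on audit and time travel requirements.

```python
def expire_snapshots_sql(catalog: str, table: str, older_than: str,
                         retain_last: int = 1) -> str:
    """Build a call that removes snapshots older than the given timestamp."""
    return (
        f"CALL {catalog}.system.expire_snapshots("
        f"table => '{table}', "
        f"older_than => TIMESTAMP '{older_than}', "
        f"retain_last => {retain_last})"
    )


def rewrite_data_files_sql(catalog: str, table: str) -> str:
    """Build a call that compacts small data files into larger ones."""
    return f"CALL {catalog}.system.rewrite_data_files(table => '{table}')"


# Placeholder catalog, table, and retention settings.
print(expire_snapshots_sql("lake", "sales.orders", "2025-07-01 00:00:00", retain_last=10))
print(rewrite_data_files_sql("lake", "sales.orders"))
```

Expired snapshots can no longer be queried with time travel, so the retention window should be set with compliance needs in mind.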

By following these steps, organizations can establish a future-ready analytics ecosystem that combines the openness and flexibility of Iceberg with the simplicity, governance, and scalability of Microsoft Fabric.

Practical Use Cases

The Fabric–Iceberg integration delivers value across industries by turning complex data into actionable insights:

- Financial services – Use time travel queries to speed up audits and strengthen compliance.

- Retail & e-commerce – Enable real-time personalization, dynamic pricing, and accurate demand forecasting.

- Healthcare – Unify fragmented patient data for predictive analytics and better treatment outcomes.

- Manufacturing – Leverage IoT data for predictive maintenance and improved quality control.

- Telecommunications – Monitor network performance at scale, optimize bandwidth, and reduce outages.

- Energy & Utilities – Strengthen ESG reporting with trustworthy, audit-ready sustainability metrics.

Trends to Watch in Fabric + Iceberg Integration

The combination of Microsoft Fabric and Apache Iceberg is shaping modern enterprise data strategies. Organizations adopting this integration should be aware of the key trends that are likely to define its impact in the coming years:

1. AI-Driven Analytics and Decisioning

With Fabric’s AI and Copilot capabilities combined with Iceberg’s reliable, structured datasets, organizations are moving beyond descriptive analytics to predictive and prescriptive insights. This allows faster decision-making, automated forecasting, and intelligent anomaly detection across business operations.

2. Real-Time and Streaming Analytics

Increasingly, enterprises require insights from continuous data streams, whether from IoT devices, financial transactions, or customer interactions. Iceberg’s support for concurrent writes and Fabric’s processing engines enable near real-time analytics, empowering more agile responses to business events.

3. Hybrid and Multi-Cloud Architectures

Many enterprises are adopting hybrid or multi-cloud strategies to avoid vendor lock-in and optimize costs. Iceberg’s open table format ensures data portability, while Fabric’s SaaS architecture allows seamless access to datasets across cloud environments without compromising governance or performance.

4. Secure Data Collaboration Across Ecosystems

Data sharing between business units, partners, or regulatory bodies is becoming critical. Fabric + Iceberg integration enables secure, governed data collaboration, allowing stakeholders to access consistent datasets while maintaining privacy and compliance.

5. Governance and Compliance by Design

With regulations such as HIPAA, SOX, and CCPA, as well as emerging ESG reporting requirements, enterprises require audit-ready, traceable, and compliant data architectures. Fabric’s governance capabilities, combined with Iceberg’s ACID compliance and time-travel features, provide a strong foundation for regulatory adherence.

How WaferWire Enables Fabric + Iceberg Success

Integrating Fabric and Iceberg is more than a technical implementation; it requires a strategy that aligns data platforms with business objectives. This is where WaferWire Cloud Technologies adds value.

WaferWire’s Expertise

- Microsoft Partner Advantage – Extensive experience in deploying Fabric within enterprise environments.

- Data Estate Modernization – Migration from legacy platforms to modern, cloud-native architectures.

- Custom Lakehouse Designs – Tailored Iceberg integration aligned with performance, compliance, and cost goals.

- Governance-First Methodology – Ensures compliance without compromising agility.

- End-to-End Services – From strategy and implementation to managed optimization.

At WaferWire, our experts specialize in helping businesses of various sizes implement, customize, and optimize Microsoft Fabric. Whether you're setting up your environment or integrating tools like Power BI, we provide the support you need to enhance your data strategy.

Contact WaferWire today to elevate your data transformation processes!

FAQs

1. How long does it take to see ROI from Fabric + Iceberg adoption?

Most organizations begin to realize benefits, such as cost reduction and faster insights, within 9 to 18 months, depending on the scale of the migration.

2. Will this disrupt existing BI tools?

No. Fabric enhances BI investments by providing larger, cleaner, and more reliable datasets, particularly for Power BI.

3. How does this integration impact compliance audits?

The combination of Fabric’s governance and Iceberg’s ACID compliance provides traceability, reducing audit preparation time and risk.

4. What operational changes are required?

Organizations often need data engineering and governance expertise. WaferWire can provide both advisory and managed services to bridge these gaps.

5. Is this approach scalable for future AI initiatives?

Yes. Fabric + Iceberg provides the foundation for training and deploying enterprise AI models, enabling predictive and prescriptive analytics at scale.

Copyright © 2025 WaferWire Cloud Technologies
